Production Guide

- 1: Installation

- 2: Upgrading

- 2.1: Upgrade to v0.15.0

- 2.2: Upgrade to v0.14.0

- 2.3: Upgrade to v0.13.7

- 2.4: Upgrade to v0.13.6

- 2.5: Upgrade to v0.10.6

- 2.6: Management Console Upgrades

- 2.7: Migrate to Data Model V1

- 2.8: Archive

- 2.8.1: Upgrade to v0.9.34

- 2.8.2: Upgrade to v0.9.15

- 2.8.3: Upgrade to v0.9.14

- 2.8.4: Upgrade to v0.9.13

- 2.8.5: Upgrade to v0.9.12

- 2.8.6: Upgrade to v0.9.11

- 2.8.7: Upgrade to v0.9.10

- 2.8.8: Upgrade to v0.9.9

- 2.8.9: Upgrade to v0.9.8

- 2.8.10: Upgrade to v0.9.7

- 2.8.11: Upgrade to v0.9.6

- 2.8.12: Upgrade to v0.9.5

- 2.8.13: Upgrade to v0.9.4

- 3: Administration

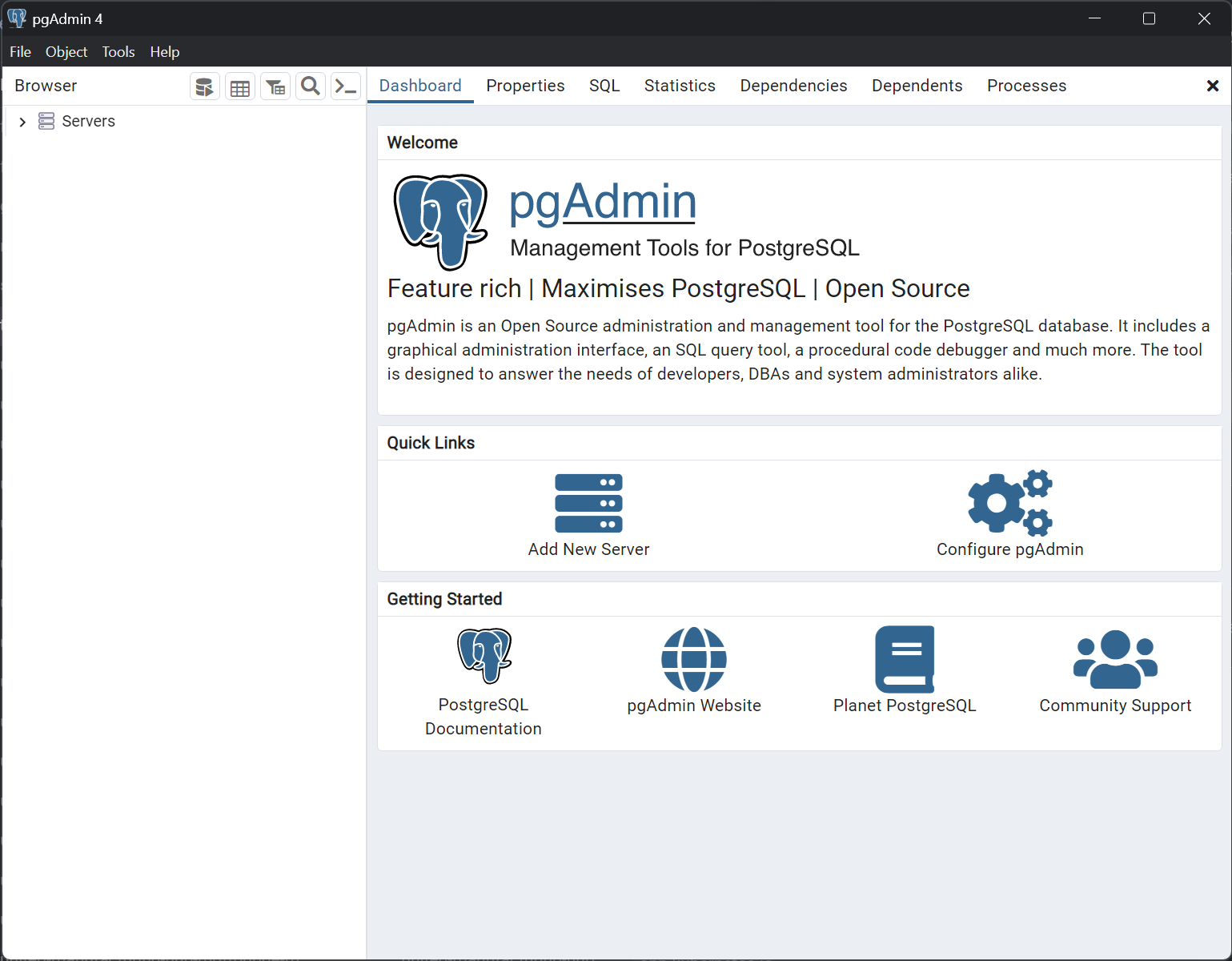

- 3.1: Access the Database

- 3.2: Access Services From Within the Cluster

- 3.3: Access Services Outside the Cluster

- 3.4: Expose Grafana to the Internet

- 3.5: Install Custom Drivers in NodeRed

- 3.6: Execute Kafka Shell Scripts

- 3.7: Reduce database size

- 3.8: Use Merge Point To Normalize Kafka Topics

- 3.9: Delete Assets from the Database

- 3.10: Change the Language in Factoryinsight

- 3.11: Explore Cached Data

- 4: Backup & Recovery

- 4.1: Backup and Restore the United Manufacturing Hub

- 4.2: Backup and Restore Database

- 4.3: Import and Export Node-RED Flows

- 5: Security

1 - Installation

Learn how to install the United Manufacturing Hub using completely Free and Open Source Software.

1.1 - Advanced Installation

This page describes advanced requirements and considerations when installing the United Manufacturing Hub. It supplements the Installation guide page.

You may also find these step-by-step installation guides helpful:

Storage Configuration

When setting up your virtual machine for UMH:

Partition Layout

- The default Rocky Linux installation typically creates a large /home partition

- During OS installation, you may use custom partitioning to ensure the root partition (/) has sufficient space

Install location

- The Kubernetes data directory is stored in /var/lib/rancher/k3s by default.

- This can be overridden by setting the K3S_DATA_DIR environment variable. See Kubernetes Configuration Variables for more information.
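Before settling on a data directory, it can help to check how much space is free where k3s will write. The following is a minimal sketch and not part of the UMH install script: the helper names free_gib and nearest_existing_dir are made up for illustration, and the fallback path is the k3s default named above.

```shell
# Illustrative helpers (hypothetical names, not part of the UMH install script).

# Print the free space, in whole GiB, of the filesystem that holds path $1.
free_gib() {
  # df -Pk: POSIX output format, 1024-byte blocks; column 4 is "Available".
  df -Pk "$1" | awk 'NR == 2 { printf "%d\n", $4 / 1024 / 1024 }'
}

# Print the closest ancestor of $1 that already exists as a directory,
# so that df has a real path to inspect even before k3s is installed.
nearest_existing_dir() {
  dir="$1"
  while [ ! -d "$dir" ]; do
    dir="$(dirname "$dir")"
  done
  printf '%s\n' "$dir"
}

# Use K3S_DATA_DIR if it is set, otherwise the default k3s location.
data_dir="${K3S_DATA_DIR:-/var/lib/rancher/k3s}"
check_dir="$(nearest_existing_dir "$data_dir")"
echo "k3s data directory: $data_dir"
echo "free space under $check_dir: $(free_gib "$check_dir") GiB"
```

If the reported space is too small, either repartition during OS installation or export K3S_DATA_DIR to point at a larger filesystem before running the installer.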

Virtual Machine Configuration

There are some specific settings that are recommended for the virtual machine:

Networking

- Use a bridged network adapter

- NAT or host-only adapters will prevent external connections to your instance

- The VM must be able to:

- Access the internet for installation and updates

- Communicate with other devices on your network

- Accept incoming connections from clients

Advanced Requirements

CPU Configuration

- Enable CPU virtualization extensions (VT-x/AMD-V) on the host

- Allocate physical CPU cores rather than virtual cores when possible

- For production environments, reserve the CPU resources

Memory Settings

- Use static memory allocation rather than dynamic/balloon drivers

- Reserve the memory in the hypervisor to prevent memory swapping

Other Settings

- Disable unnecessary virtual hardware (like sound cards, USB controllers)

- Use paravirtualized drivers when available for better performance

General Script Control Variables

For advanced users, the following variables can be used to control the behavior of the script:

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| AUTO_SELECT_EXTERNAL_IP | auto_select_external_ip | false | If true, automatically pick the first non-loopback network interface and set EXTERNAL_IP/EXTERNAL_IP_INTERFACE/EXTERNAL_IP_GATEWAY based on it. |
| GENERAL_IGNORE_ERRORS | ignore_errors | false | If true, the script will ignore errors and continue, mainly used for troubleshooting. |
| GENERAL_TIMEOUT | TIMEOUT | 120 | Time in seconds to wait for a process before timing out (default: 2 minutes). |
| GENERAL_INTERVAL | INTERVAL | 5 | Interval in seconds to check a process status (default: every 5 seconds). |
| GENERAL_RETRIES | RETRIES | 10 | Number of retries for certain checks (default: 10). |
| UNINSTALL | UNINSTALL | false | If true, the script will uninstall the UMH, Companion, and Kubernetes instead of installing them. |
| EXTERNAL_IP | EXTERNAL_IP | (unset) | Manually set the external IP of the machine. |
| EXTERNAL_IP_INTERFACE | EXTERNAL_IP_INTERFACE | (unset) | Manually set the network interface associated with EXTERNAL_IP. |
| EXTERNAL_IP_GATEWAY | EXTERNAL_IP_GATEWAY | (unset) | Manually set the default gateway associated with EXTERNAL_IP_INTERFACE. |
| DNS_SERVER | DNS_SERVER | (unset) | Manually set the DNS server to be used by mgmtcompanion. |

Tooling Installation Variables

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| TOOL_K3S_VERSION | INSTALL_K3S_VERSION | v1.29.5+k3s1 | Specifies the version of k3s to be installed. |
| TOOL_K3S_SELINUX_VERSION | INSTALL_K3S_SELINUX_VERSION | k3s-selinux-1.5-1.el9.noarch.rpm | Specifies the version (or RPM) of k3s SELinux policy to install on RHEL systems. |
| TOOL_KUBECTL_VERSION | INSTALL_KUBECTL_VERSION | v1.29.5 | Specifies the version of kubectl to be installed. |
| TOOL_HELM_VERSION | INSTALL_HELM_VERSION | v3.13.2 | Specifies the version of helm to be installed. |
| TOOL_K3S_CUSTOM_TLS_SAN | CUSTOM_TLS_SAN | (unset) | Custom TLS Subject Alternative Names for k3s. For example, IP addresses or a domain name for TLS certificates. |
| TOOL_K3S_KUBECONFIG | KUBECONFIG | /etc/rancher/k3s/k3s.yaml | Path to the kubeconfig file to be used by kubectl, helm, etc. |

Kubernetes Configuration Variables

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| KUBE_NODE_NAME | NODE_NAME | Uses the system’s hostname | Node name in the Kubernetes cluster. |
| AUTO_PATCH_HOSTNAME | auto_patch_hostname | false | If true, automatically sets the system hostname to NODE_NAME without user confirmation. |
| (Set internally) | INSTALL_K3S_EXEC | (constructed dynamically) | Internal variable to pass additional flags (like --tls-san) during k3s installation. This is auto-generated; not usually set directly. |
| K3S_DATA_DIR | K3S_DATA_DIR | k3s default (/var/lib/rancher/k3s or ${HOME}/.rancher/k3s if not root) | Specifies the data directory for k3s by setting the --data-dir flag. |

Speedtest Configuration Variables

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| SPEEDTEST_MIN_DOWNLOAD | MIN_DOWNLOAD | 50000000 | Minimum download speed in bits per second (default: 50 Mbps). |
| SPEEDTEST_MIN_UPLOAD | MIN_UPLOAD | 10000000 | Minimum upload speed in bits per second (default: 10 Mbps). |
| SPEEDTEST_MIN_PING | MIN_PING | 50 | Maximum ping in milliseconds (default: 50). |
| (Script-internal) | MEASURED_UPLOAD | 0 | Automatically set by script after running speed test; not typically set by the user. |
| (Script-internal) | MEASURED_DOWNLOAD | 0 | Automatically set by script after running speed test; not typically set by the user. |
| (Script-internal) | MEASURED_PING | 0 | Automatically set by script after running speed test; not typically set by the user. |
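Because the speed thresholds are given in bits per second, a small conversion helper can reduce mistakes when lowering them for a slower link. This is only a sketch: mbps_to_bps is a hypothetical helper, and the 20 Mbps and 5 Mbps values are illustrative rather than recommendations.

```shell
# Convert a speed in megabits per second to bits per second, the unit
# that SPEEDTEST_MIN_DOWNLOAD and SPEEDTEST_MIN_UPLOAD are documented in.
mbps_to_bps() {
  echo "$(( $1 * 1000000 ))"
}

# Example: relax the thresholds to 20 Mbps down and 5 Mbps up (illustrative).
SPEEDTEST_MIN_DOWNLOAD="$(mbps_to_bps 20)"
SPEEDTEST_MIN_UPLOAD="$(mbps_to_bps 5)"
export SPEEDTEST_MIN_DOWNLOAD SPEEDTEST_MIN_UPLOAD

echo "download >= $SPEEDTEST_MIN_DOWNLOAD bit/s, upload >= $SPEEDTEST_MIN_UPLOAD bit/s"
```

The factor of 1,000,000 matches the table defaults, where 50000000 is described as 50 Mbps.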

UMH Installation Variables

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| UMH_SKIP_INSTALL | skip_umh_install | false | If true, skips the installation of the United Manufacturing Hub (UMH). |
| UMH_FORCE_OVERWRITE | force_umh_overwrite | false | If true, forces overwriting an existing UMH installation without prompting the user. |
| UMH_VERSION | UMH_VERSION | 0.27.0 | Specifies which version of the UMH chart to install. |
| UMH_HELM_CHART_URL | UMH_HELM_CHART_URL | https://management.umh.app/helm/umh | The URL of the UMH Helm chart repository. |
| ALLOW_HELM_PRERELEASE | ALLOW_HELM_PRERELEASE | false | If true, allows Helm to install pre-release versions of UMH. |
| IS_LITE_INSTALL | IS_LITE_INSTALL | false | If true, installs a “lite” version of UMH; forcibly sets skip_umh_install to true. |
| IS_FULL_INSTALL | IS_FULL_INSTALL | true | True if installing the “full” version of UMH. Note that it becomes false if IS_LITE_INSTALL is true. |

Management Companion Installation Variables

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| MGMT_AUTH_TOKEN | AUTH_TOKEN | (unset) | Required. A 64-character hex string (256-bit) used by the Management Companion for authentication. |
| MGMT_INSECURE_TLS_SKIP_CERTIFICATE_CHECK | insecure_tls_skip_certificate_check | false | If true, skip TLS certificate checks. Useful in development or if you have a proxy intercepting traffic. |
| MGMT_CUSTOM_URL | CUSTOM_MGMT_URL | https://management.umh.app | A custom management URL for specialized setups. |
| MGMT_CUSTOM_IMAGE_VERSION | IMAGE_VERSION | latest | Custom version tag for the Management Companion Docker image (e.g., staging). |
| MGMT_SKIP_INSTALL | skip_companion_install | false | If true, skips the installation of the Management Companion. |
| MGMT_SKIP_RHEL_SHA1_SIGNATURES | SKIP_RHEL_SHA1_SIGNATURES | false | On RHEL-based systems, if true, does not temporarily enable SHA1 signatures (needed for some older packages). |
| MGMT_CONFIG_DEBUG_DISABLE_BACKEND_CONNECTION | MGMT_CONFIG_DEBUG_DISABLE_BACKEND_CONNECTION | false | Disables the backend connection for debugging. |
| MGMT_API_URL | API_URL | $CUSTOM_MGMT_URL/api | API endpoint used by the Management Companion to communicate with the UMH backend. |
| MGMT_STATIC_URL | STATIC_URL | $CUSTOM_MGMT_URL/static | URL for static resources (Kubernetes YAML files, etc.). |
| MGMT_CONFIGMAP_URL | CONFIGMAP_URL | $STATIC_URL/kubernetes/configmap.yaml | Location of the companion’s ConfigMap YAML. |
| MGMT_SECRET_URL | SECRET_URL | $STATIC_URL/kubernetes/secret.yaml | Location of the companion’s Secret YAML. |
| MGMT_STATEFULSET_URL | STATEFULSET_URL | $STATIC_URL/kubernetes/statefulset.yaml | Location of the companion’s StatefulSet YAML. |
| MGMT_ROLE_URL | ROLE_URL | $STATIC_URL/kubernetes/role.yaml | Location of the companion’s Role YAML. |
| MGMT_ROLE_BINDING_URL | ROLE_BINDING_URL | $STATIC_URL/kubernetes/rolebinding.yaml | Location of the companion’s RoleBinding YAML. |
| MGMT_PVC_URL | PVC_URL | $STATIC_URL/kubernetes/pvc.yaml | Location of the companion’s PVC YAML. |
| MGMT_KUBELET_URL | KUBELET_URL | $STATIC_URL/kubernetes/kubelet-config.yaml | Location of the Kubelet configuration YAML. |
| MGMT_REGISTRIES_URL | REGISTRIES_URL | $STATIC_URL/kubernetes/registries.yaml | Custom Docker registry configuration for k3s. |
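Several defaults in this table are built from one another, which is easier to see when expanded. The sketch below prints a few of them for a given base URL, following the Default Value column only. print_default_urls is a hypothetical helper, and whether the install script re-derives every URL when only MGMT_CUSTOM_URL is overridden is an assumption to verify against the script itself.

```shell
# Print some of the default companion URLs that follow from a management
# base URL, mirroring the "Default Value" column of the table above.
print_default_urls() {
  base="${1%/}"            # drop one trailing slash, if present
  static="$base/static"
  echo "MGMT_API_URL=$base/api"
  echo "MGMT_STATIC_URL=$static"
  echo "MGMT_CONFIGMAP_URL=$static/kubernetes/configmap.yaml"
  echo "MGMT_SECRET_URL=$static/kubernetes/secret.yaml"
  echo "MGMT_STATEFULSET_URL=$static/kubernetes/statefulset.yaml"
}

# Fall back to the documented default when MGMT_CUSTOM_URL is not set.
print_default_urls "${MGMT_CUSTOM_URL:-https://management.umh.app}"
```

Comparing this output with the values you intend to export is a quick way to spot a URL that was overridden by hand and no longer matches its base.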

Location Variables

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| LOCATION_ENTERPRISE | LOCATION_ENTERPRISE | (unset) | Enterprise name/location for site identification. |
| LOCATION_SITE | LOCATION_SITE | (unset) | Site name/location for site identification. |
| LOCATION_AREA | LOCATION_AREA | (unset) | Area name/location for site identification. |
| LOCATION_LINE | LOCATION_LINE | (unset) | Production line name/location for site identification. |
| LOCATION_WORKCELL | LOCATION_WORKCELL | (unset) | Workcell name/location for site identification. |

Additional Installation Options

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| SILENT | SILENT | false | If true, the script proceeds without interactive confirmations (all prompts default to “yes”). |
| SKIP_HARDWARE_TEST | SKIP_HARDWARE_TEST | false | If true, skip hardware checks (CPU cores, memory, disk space, SSE4.2). Useful for dev or limited resource machines. |
| DISABLE_HARDWARE_STATUS_CHECK | DISABLE_HARDWARE_STATUS_CHECK | false | If true, skip or ignore hardware checks in the status messages. |

Release Channel

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| RELEASE_CHANNEL | RELEASE_CHANNEL | stable | Channel to use for UMH updates/notifications. Options: enterprise, stable, nightly |

Helm Flags

| Environment Variable | Script Variable | Default Value | Description |
|---|---|---|---|
| (Set internally) | CHART_VERSION | UMH_VERSION | Used by the script to pass --version to Helm when installing the UMH chart. |
| SAME_TOPIC_EXPERIENCE | SAME_TOPIC_EXPERIENCE | false | If true, uses the same Kafka topic across certain analytics solutions (internal optional feature). |

Environment Variables for Testing and Troubleshooting

These variables let you run only portions of the script for debugging:

| Environment Variable | Default | Description |
|---|---|---|
| INSTALL_HELM | false | If true, only executes the Helm installation portion of the script. |
| UNINSTALL_HELM | false | If true, only executes the Helm uninstallation portion of the script. |
| INSTALL_KUBECTL | false | If true, only executes the kubectl installation portion of the script. |
| UNINSTALL_KUBECTL | false | If true, only executes the kubectl uninstallation portion of the script. |
| INSTALL_KUBERNETES | false | If true, only installs k3s (and does pre-checks). |
| UNINSTALL_KUBERNETES | false | If true, only uninstalls k3s (and does relevant cleanup). |

Other Internal Variables

The variables below are set or used internally and typically do not need to be overridden:

- TMP_FILE_BASE_DIR (defaults to /tmp; used to specify the base directory for temporary files)

- SENTRY_DSN (used for error reporting to Sentry)

- INSTALL_HELM / UNINSTALL_HELM (see the testing and troubleshooting table)

- INSTALL_KUBECTL / UNINSTALL_KUBECTL (see the testing and troubleshooting table)

- INSTALL_KUBERNETES / UNINSTALL_KUBERNETES (see the testing and troubleshooting table)

How to Override Variables

To override any variable, simply export it before running the script, or prepend it on the command line. For example:

export UMH_VERSION="0.28.1"

export MGMT_AUTH_TOKEN="0123456789abcdef..."

export SILENT="true"

./install.sh

Or in a single line:

UMH_VERSION="0.28.1" MGMT_AUTH_TOKEN="0123456789abcdef..." SILENT="true" ./install.sh
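Since MGMT_AUTH_TOKEN is described as a required 64-character hex string, a quick format check before starting the installer can catch a truncated or mistyped token early. This is a minimal sketch: is_valid_auth_token is a hypothetical helper, and accepting uppercase hex digits is an assumption, not something the install script is documented to do.

```shell
# Return success if $1 consists of exactly 64 hexadecimal characters.
# Assumption: both lowercase and uppercase hex digits are accepted.
is_valid_auth_token() {
  printf '%s' "$1" | grep -Eq '^[0-9a-fA-F]{64}$'
}

# Report on the token currently present in the environment, if any.
if is_valid_auth_token "${MGMT_AUTH_TOKEN:-}"; then
  echo "MGMT_AUTH_TOKEN looks well-formed"
else
  echo "MGMT_AUTH_TOKEN must be a 64-character hex string" >&2
fi
```

The check only validates the format; it cannot tell whether the token is one the Management Console will accept.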

1.2 - Flatcar Installation

Here is a step-by-step guide on how to deploy the United Manufacturing Hub on Flatcar Linux, a Linux distribution designed for container workloads with high security and low maintenance. This will leverage the UMH Device and Container Infrastructure.

The system can be installed either bare metal or in a virtual machine.

Before you begin

Ensure your system meets these minimum requirements:

- 4-core CPU

- 8 GB system RAM

- 32 GB available disk space

- Internet access
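If the target machine can already boot some Linux system (for example a live image), the CPU and memory minimums above can be checked with a few lines of shell. This is a sketch under assumptions: meets_minimum and check_this_machine are hypothetical helpers, nproc and /proc/meminfo are assumed to be available, and the size of the target drive is passed in by hand because it depends on which drive you plan to install to.

```shell
# Return success if the given resources meet the minimums listed above.
# $1 = CPU cores, $2 = RAM in GB, $3 = disk size in GB.
meets_minimum() {
  [ "$1" -ge 4 ] && [ "$2" -ge 8 ] && [ "$3" -ge 32 ]
}

# Read cores and RAM from a running Linux system (assumes nproc and
# /proc/meminfo exist); the target drive size in GB is passed as $1.
check_this_machine() {
  cores="$(nproc)"
  ram_kib="$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)"
  # Round up: the kernel reports slightly less than the installed RAM.
  ram_gb=$(( (ram_kib + 1048575) / 1048576 ))
  if meets_minimum "$cores" "$ram_gb" "$1"; then
    echo "OK: $cores cores, $ram_gb GB RAM, $1 GB disk"
  else
    echo "Below minimum: $cores cores, $ram_gb GB RAM, $1 GB disk" >&2
    return 1
  fi
}
```

For example, check_this_machine 64 checks the current machine assuming a 64 GB target drive.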

You will also need the latest version of the iPXE boot image, suitable for your system:

- ipxe-x86_64-efi: For modern systems, recommended for virtual machines.

- ipxe-x86_64-bios: For legacy systems.

- ipxe-arm64-efi: For ARM architectures (Note: Raspberry Pi 4 is currently not supported).

For bare metal installations, flash the image to a USB stick with at least 4 GB of storage. Our guide on flashing an operating system to a USB stick can assist you.

For virtual machines, ensure UEFI boot is enabled when creating the VM.

Lastly, ensure you are on the same network as the device for SSH access post-installation.

System Preparation and Booting from iPXE

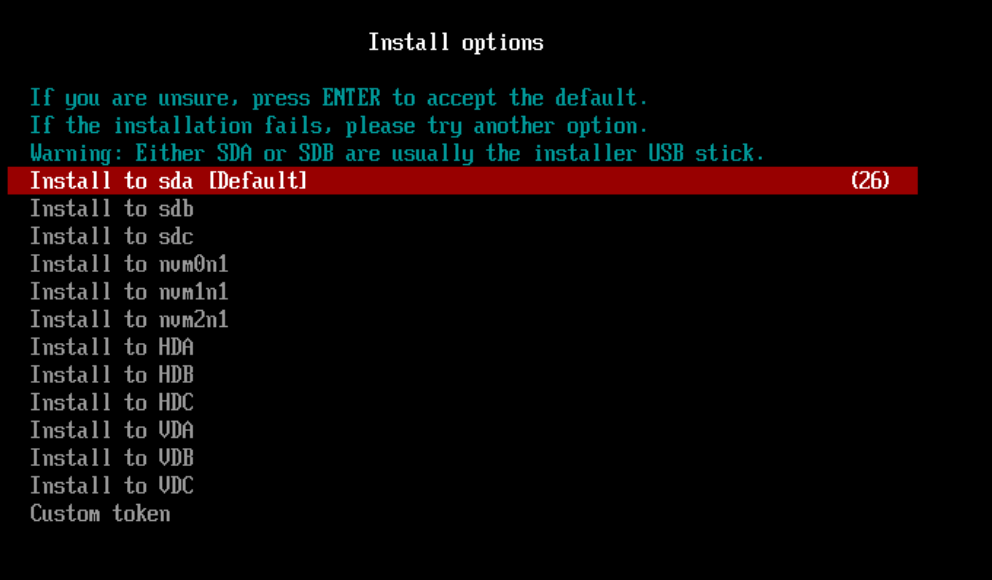

Identify the drive for Flatcar Linux installation. For virtual machines, this is typically sda. For bare metal, the drive depends on your physical storage. The troubleshooting section can help identify the correct drive.

Boot your device from the iPXE image. Consult your device or hypervisor documentation for booting instructions.

You can find a comprehensive guide on how to configure a virtual machine in Proxmox for installing Flatcar Linux on the Learning Hub.

Installation

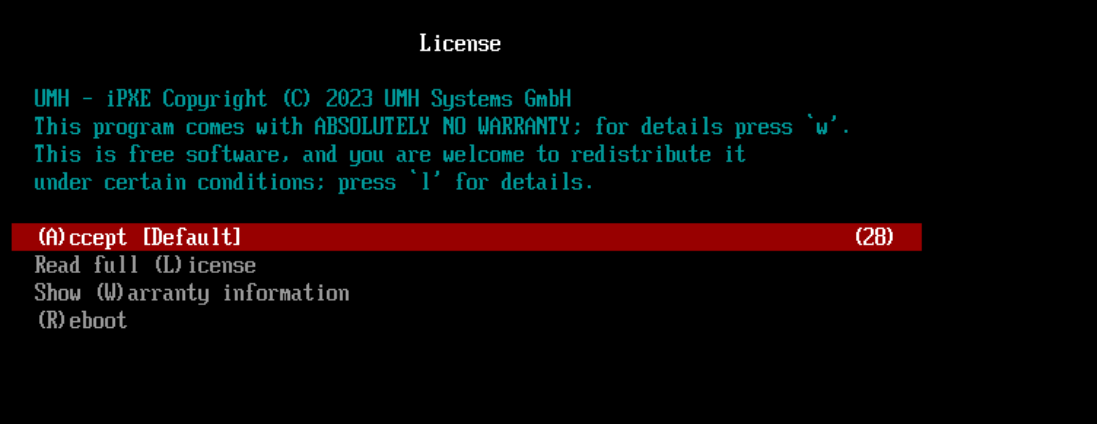

At the first prompt, read and accept the license to proceed.

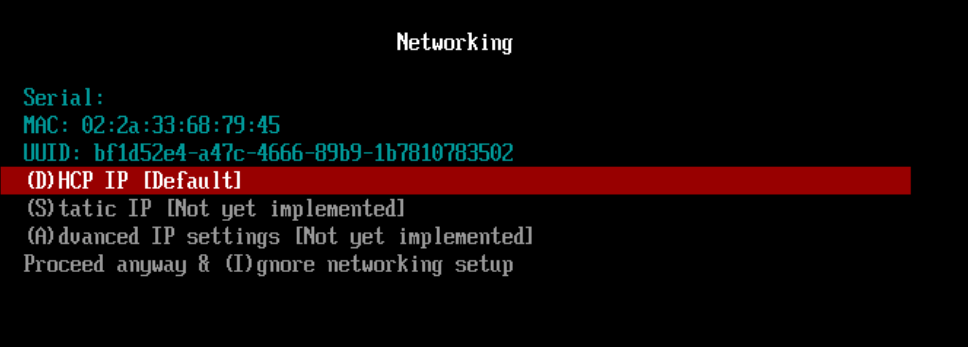

Next, configure your network settings. Select DHCP if uncertain.

The connection will be tested next. If it fails, revisit the network settings.

Ensure your device has internet access and no firewalls are blocking the connection.

Then, select the drive for Flatcar Linux installation.

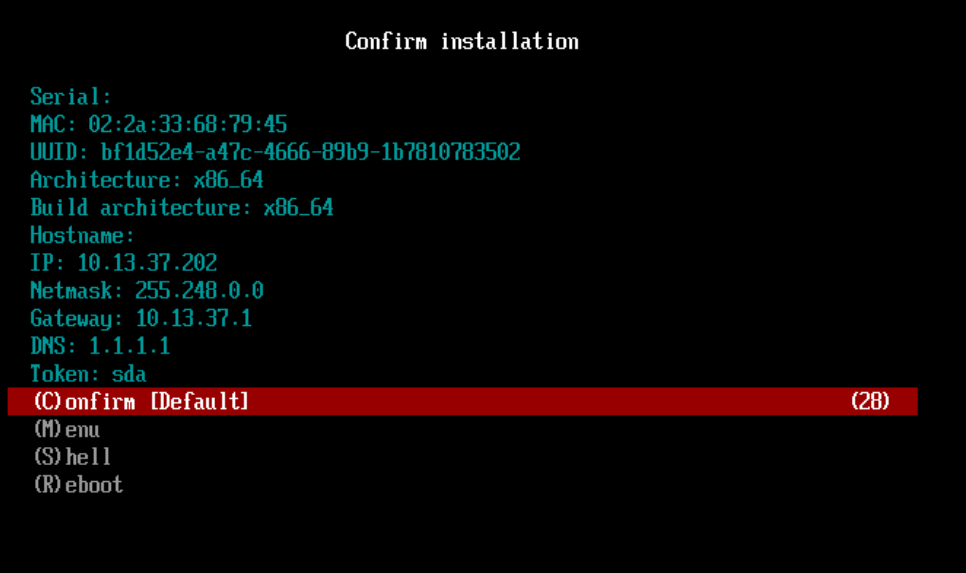

A summary of the installation will appear. Check that everything is correct and confirm to start the process.

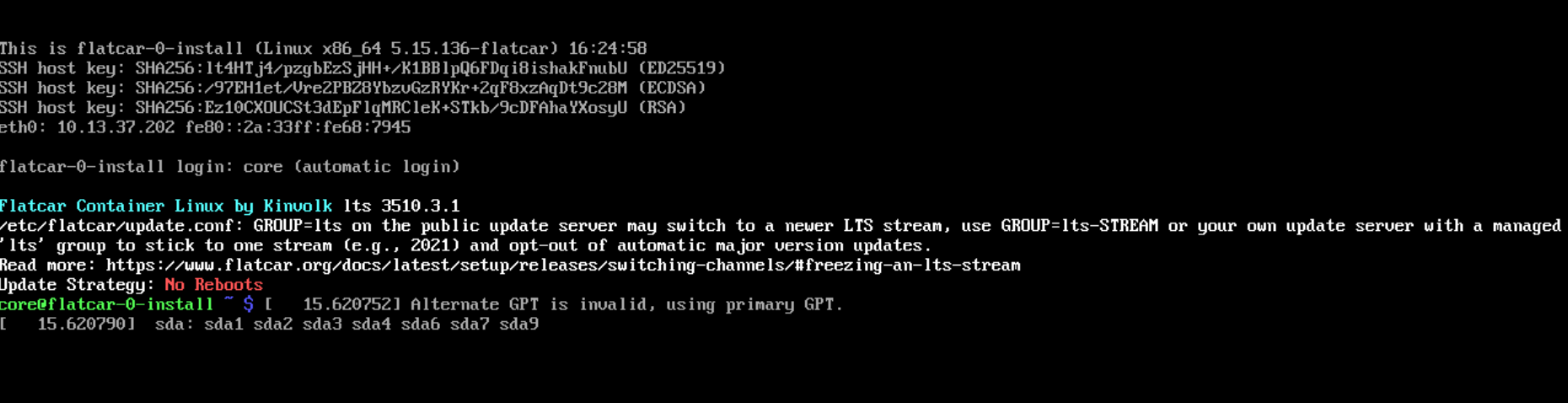

Shortly after, you’ll see a green command line core@flatcar-0-install. Remove the USB stick or the CD drive from the VM. The system will continue processing.

The installation will complete after a few minutes, and the system will reboot.

When you see the green core@flatcar-1-umh login prompt, the installation is complete, and the device’s IP address will be displayed.

Installation time varies based on network speed and system performance.

Connect to the Device

With the system installed, access it via SSH.

To do so, open your terminal of choice. On Windows 11, the default Windows Terminal is recommended; on other operating systems, try MobaXTerm.

Connect to the device using this command, substituting <ip-address> with your device’s IP address:

ssh core@<ip-address>

When prompted, enter the default password for the core user: umh.

Troubleshooting

The Installation Stops at the First Green Login Prompt

If the installation halts at the first green login prompt, check the installation status with:

systemctl status installer

A typical response for an ongoing installation will look like this:

● installer.service - Flatcar Linux Installer

Loaded: loaded (/usr/lib/systemd/system/installer.service; static; vendor preset: enabled)

Active: active (running) since Wed 2021-05-12 14:00:00 UTC; 1min 30s ago

If the status differs, the installation may have failed. Review the logs to identify the issue.

Unsure Which Drive to Select

To determine the correct drive, refer to your device’s manual:

- SATA drives (HDD or SSD): Typically labeled as sda.

- NVMe drives: Usually labeled as nvme0n1.

For further verification, boot any Linux distribution on your device and execute:

lsblk

The output, resembling the following, will help identify the drive:

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 223.6G 0 disk

├─sda1 8:1 0 512M 0 part /boot

└─sda2 8:2 0 223.1G 0 part /

sdb 8:16 0 31.8G 0 disk

└─sdb1 8:17 0 31.8G 0 part /mnt/usb

In most cases, the correct drive is the first listed or the one not matching the USB stick size.
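To narrow the list down automatically, the lsblk output can be filtered for whole disks that are not flagged as removable. This is a sketch, with list_install_candidates as a hypothetical helper. It assumes the USB stick reports itself as removable (RM = 1), which is common but not guaranteed, so treat the result as a hint and compare sizes as described above.

```shell
# Read "NAME SIZE TYPE RM" rows on stdin and print the name and size of
# every whole disk that is not marked as removable.
list_install_candidates() {
  awk '$3 == "disk" && $4 == "0" { print $1, $2 }'
}

# On a live Linux system, feed it real data. lsblk flags used here:
#   -d  list whole devices only, without their partitions
#   -n  omit the header line
#   -o  choose the output columns
#
#   lsblk -d -n -o NAME,SIZE,TYPE,RM | list_install_candidates
```

Keeping the filter separate from the lsblk call makes it easy to try against saved output from another machine.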

No Resources in the Cluster

If you can access the cluster but see no resources, SSH into the edge device and check the cluster status:

systemctl status k3s

If the status is not active (running), the cluster isn’t operational. Restart it with:

sudo systemctl restart k3s

If the cluster is active or restarting doesn’t resolve the issue, inspect the installation logs:

systemctl status umh-install

systemctl status helm-install

Persistent errors may necessitate a system reinstallation.

I can’t SSH into the virtual machine

Ensure that your computer is on the same network as the virtual machine, with no firewalls or VPNs blocking the connection.

What’s next

- You can follow the Getting Started guide to get familiar with the UMH stack.

- If you already know your way around the United Manufacturing Hub, you can follow the Administration guides to configure the stack for production.

2 - Upgrading

2.1 - Upgrade to v0.15.0

This page describes how to upgrade the United Manufacturing Hub from version 0.14.0 to 0.15.0. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Upgrade Helm Chart

Upgrade the Helm chart to the 0.15.0 version:

bash <(curl -s https://management.umh.app/binaries/umh/migrations/0_15_0.sh)
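If you prefer to read a migration script before it runs, it can be downloaded to a file first instead of being piped straight into bash. The sketch below assumes the URL pattern seen in the upgrade commands on this page (dots in the version replaced by underscores); migration_url and fetch_migration are hypothetical helpers, and the pattern is not guaranteed to hold for versions not listed here.

```shell
# Build the migration script URL for a version such as 0.15.0, following
# the naming pattern of the command above (dots become underscores).
migration_url() {
  echo "https://management.umh.app/binaries/umh/migrations/$(echo "$1" | tr . _).sh"
}

# Download the script to a local file so it can be inspected first.
# curl flags: -f fail on HTTP errors, -sS quiet but still show errors,
# -L follow redirects, -o write to the named file.
fetch_migration() {
  curl -fsSL -o "migration_$1.sh" "$(migration_url "$1")"
}

# Usage:
#   fetch_migration 0.15.0 && less migration_0.15.0.sh
#   bash migration_0.15.0.sh
```

Running the saved file with bash has the same effect as the one-line command, with a chance to review the script in between.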

Troubleshooting

If the upgrade fails for some reason, you can delete the deployments, statefulsets, and jobs listed below and try again. This will not delete your data.

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete deployment \

united-manufacturing-hub-factoryinsight-deployment \

united-manufacturing-hub-iotsensorsmqtt \

united-manufacturing-hub-opcuasimulator-deployment \

united-manufacturing-hub-packmlmqttsimulator \

united-manufacturing-hub-mqttkafkabridge \

united-manufacturing-hub-kafkatopostgresqlv2 \

united-manufacturing-hub-kafkatopostgresql \

united-manufacturing-hub-grafana \

united-manufacturing-hub-databridge-0 \

united-manufacturing-hub-console

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete statefulset \

united-manufacturing-hub-hivemqce \

united-manufacturing-hub-kafka \

united-manufacturing-hub-nodered \

united-manufacturing-hub-sensorconnect \

united-manufacturing-hub-mqttbridge \

united-manufacturing-hub-timescaledb \

united-manufacturing-hub-redis-master

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete jobs \

united-manufacturing-hub-kafka-configuration
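The troubleshooting commands repeat the same namespace and kubeconfig flags, so a small wrapper can make it easier to look at the cluster before deleting anything. This is a sketch only: umh_kubectl and the DRY_RUN switch are hypothetical and not part of UMH. The namespace and kubeconfig path are taken from the commands above.

```shell
# Hypothetical wrapper: fills in the namespace and kubeconfig used on this page.
# With DRY_RUN=1 it prints the command instead of running it.
umh_kubectl() {
  # Resolve the full path first, as the commands above do with $(which kubectl),
  # because sudo may not search the same PATH as the current user.
  kubectl_bin="$(command -v kubectl || echo kubectl)"
  set -- "$kubectl_bin" -n united-manufacturing-hub \
    --kubeconfig /etc/rancher/k3s/k3s.yaml "$@"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    sudo "$@"
  fi
}

# Inspect what exists before deleting anything:
#   umh_kubectl get deployment,statefulset,job
```

Listing the resources first shows which of the names in the delete commands actually exist in your installation.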

2.2 - Upgrade to v0.14.0

This page describes how to upgrade the United Manufacturing Hub from version 0.13.6 to 0.14.0. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Upgrade Helm Chart

Upgrade the Helm chart to the 0.14.0 version:

bash <(curl -s https://management.umh.app/binaries/umh/migrations/0_14_0.sh)

Troubleshooting

If the upgrade fails for some reason, you can delete the deployments, statefulsets, and jobs listed below and try again. This will not delete your data.

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete deployment \

united-manufacturing-hub-factoryinsight-deployment \

united-manufacturing-hub-iotsensorsmqtt \

united-manufacturing-hub-opcuasimulator-deployment \

united-manufacturing-hub-packmlmqttsimulator \

united-manufacturing-hub-mqttkafkabridge \

united-manufacturing-hub-kafkatopostgresqlv2 \

united-manufacturing-hub-kafkatopostgresql \

united-manufacturing-hub-grafana \

united-manufacturing-hub-databridge-0 \

united-manufacturing-hub-console

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete statefulset \

united-manufacturing-hub-hivemqce \

united-manufacturing-hub-kafka \

united-manufacturing-hub-nodered \

united-manufacturing-hub-sensorconnect \

united-manufacturing-hub-mqttbridge \

united-manufacturing-hub-timescaledb \

united-manufacturing-hub-redis-master

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete jobs \

united-manufacturing-hub-kafka-configuration

2.3 - Upgrade to v0.13.7

This page describes how to upgrade the United Manufacturing Hub from version 0.13.6 to 0.13.7. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Upgrade Helm Chart

Upgrade the Helm chart to the 0.13.7 version:

bash <(curl -s https://management.umh.app/binaries/umh/migrations/0_13_7.sh)

Troubleshooting

If the upgrade fails for some reason, you can delete the deployments, statefulsets, and jobs listed below and try again. This will not delete your data.

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete deployment \

united-manufacturing-hub-factoryinsight-deployment \

united-manufacturing-hub-iotsensorsmqtt \

united-manufacturing-hub-opcuasimulator-deployment \

united-manufacturing-hub-packmlmqttsimulator \

united-manufacturing-hub-mqttkafkabridge \

united-manufacturing-hub-kafkatopostgresqlv2 \

united-manufacturing-hub-kafkatopostgresql \

united-manufacturing-hub-grafana \

united-manufacturing-hub-databridge-0 \

united-manufacturing-hub-console

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete statefulset \

united-manufacturing-hub-hivemqce \

united-manufacturing-hub-kafka \

united-manufacturing-hub-nodered \

united-manufacturing-hub-sensorconnect \

united-manufacturing-hub-mqttbridge \

united-manufacturing-hub-timescaledb \

united-manufacturing-hub-redis-master

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete jobs \

united-manufacturing-hub-kafka-configuration

2.4 - Upgrade to v0.13.6

This page describes how to upgrade the United Manufacturing Hub to version 0.13.6. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Upgrade Helm Chart

Upgrade the Helm chart to the 0.13.6 version:

bash <(curl -s https://management.umh.app/binaries/umh/migrations/0_13_6.sh)

Troubleshooting

If the upgrade fails for some reason, you can delete the deployments, statefulsets, and jobs listed below and try again. This will not delete your data.

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete deployment \

united-manufacturing-hub-factoryinsight-deployment \

united-manufacturing-hub-iotsensorsmqtt \

united-manufacturing-hub-opcuasimulator-deployment \

united-manufacturing-hub-packmlmqttsimulator \

united-manufacturing-hub-mqttkafkabridge \

united-manufacturing-hub-kafkatopostgresqlv2 \

united-manufacturing-hub-kafkatopostgresql \

united-manufacturing-hub-grafana \

united-manufacturing-hub-databridge-0 \

united-manufacturing-hub-console

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete statefulset \

united-manufacturing-hub-hivemqce \

united-manufacturing-hub-kafka \

united-manufacturing-hub-nodered \

united-manufacturing-hub-sensorconnect \

united-manufacturing-hub-mqttbridge \

united-manufacturing-hub-timescaledb \

united-manufacturing-hub-redis-master

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete jobs \

united-manufacturing-hub-kafka-configuration

2.5 - Upgrade to v0.10.6

This page describes how to upgrade the United Manufacturing Hub to version 0.10.6. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

All the following commands are to be run from the UMH instance’s shell.

Update Helm Repo

Fetch the latest Helm charts from the UMH repository:

sudo $(which helm) repo update --kubeconfig /etc/rancher/k3s/k3s.yaml

Upgrade Helm Chart

Upgrade the Helm chart to the 0.10.6 version:

sudo $(which helm) upgrade united-manufacturing-hub united-manufacturing-hub/united-manufacturing-hub -n united-manufacturing-hub --version 0.10.6 --reuse-values --kubeconfig /etc/rancher/k3s/k3s.yaml \

--set _000_commonConfig.infrastructure.mqtt.tls.factoryinput=null \

--set _000_commonConfig.datainput=null \

--set _000_commonConfig.mqttBridge=null \

--set mqttbridge=null \

--set factoryinput=null \

--set grafanaproxy=null \

--set kafkastatedetector.image.repository=management.umh.app/oci/united-manufacturing-hub/kafkastatedetector \

--set barcodereader.image.repository=management.umh.app/oci/united-manufacturing-hub/barcodereader \

--set sensorconnect.image=management.umh.app/oci/united-manufacturing-hub/sensorconnect \

--set iotsensorsmqtt.image=management.umh.app/oci/amineamaach/sensors-mqtt \

--set opcuasimulator.image=management.umh.app/oci/united-manufacturing-hub/opcuasimulator \

--set kafkabridge.image.repository=management.umh.app/oci/united-manufacturing-hub/kafka-bridge \

--set kafkabridge.initContainer.repository=management.umh.app/oci/united-manufacturing-hub/kafka-init \

--set factoryinsight.image.repository=management.umh.app/oci/united-manufacturing-hub/factoryinsight \

--set kafkatopostgresql.image.repository=management.umh.app/oci/united-manufacturing-hub/kafka-to-postgresql \

--set kafkatopostgresql.initContainer.repository=management.umh.app/oci/united-manufacturing-hub/kafka-init \

--set timescaledb-single.image.repository=management.umh.app/oci/timescale/timescaledb-ha \

--set timescaledb-single.prometheus.image.repository=management.umh.app/oci/prometheuscommunity/postgres-exporter \

--set grafana.image.repository=management.umh.app/oci/grafana/grafana \

--set grafana.downloadDashboardsImage.repository=management.umh.app/oci/curlimages/curl \

--set grafana.testFramework.image=management.umh.app/oci/bats/bats \

--set grafana.initChownData.image.repository=management.umh.app/oci/library/busybox \

--set grafana.sidecar.image.repository=management.umh.app/oci/kiwigrid/k8s-sidecar \

--set grafana.imageRenderer.image.repository=management.umh.app/oci/grafana/grafana-image-renderer \

--set packmlmqttsimulator.image.repository=management.umh.app/oci/spruiktec/packml-simulator \

--set tulipconnector.image.repository=management.umh.app/oci/united-manufacturing-hub/tulip-connector \

--set mqttkafkabridge.image.repository=management.umh.app/oci/united-manufacturing-hub/mqtt-kafka-bridge \

--set mqttkafkabridge.initContainer.repository=management.umh.app/oci/united-manufacturing-hub/kafka-init \

--set kafkatoblob.image.repository=management.umh.app/oci/united-manufacturing-hub/kafka-to-blob \

--set redpanda.image.repository=management.umh.app/oci/redpandadata/redpanda \

--set redpanda.statefulset.initContainerImage.repository=management.umh.app/oci/library/busybox \

--set redpanda.console.image.registry=management.umh.app/oci \

--set redis.image.registry=management.umh.app/oci \

--set redis.metrics.image.registry=management.umh.app/oci \

--set redis.sentinel.image.registry=management.umh.app/oci \

--set redis.volumePermissions.image.registry=management.umh.app/oci \

--set redis.sysctl.image.registry=management.umh.app/oci \

--set mqtt_broker.image.repository=management.umh.app/oci/hivemq/hivemq-ce \

--set mqtt_broker.initContainer.hivemqextensioninit.image.repository=management.umh.app/oci/united-manufacturing-hub/hivemq-init \

--set metrics.image.repository=management.umh.app/oci/united-manufacturing-hub/metrics \

--set databridge.image.repository=management.umh.app/oci/united-manufacturing-hub/databridge \

--set kafkatopostgresqlv2.image.repository=management.umh.app/oci/united-manufacturing-hub/kafka-to-postgresql-v2

Manual steps (optional)

Due to a limitation of Helm, we cannot automatically set grafana.env.GF_PLUGINS_ALLOW_LOADING_UNSIGNED_PLUGINS=umh-datasource,umh-v2-datasource.

You can either ignore this (if your network is not restricted to a single domain) or set it manually in the Grafana deployment.

We are also not able to automatically overwrite grafana.extraInitContainers[0].image=management.umh.app/oci/united-manufacturing-hub/grafana-umh.

Again, you can either ignore this (if your network is not restricted to a single domain) or set it manually in the Grafana deployment.

Host system

Open /var/lib/rancher/k3s/agent/etc/containerd/config.toml.tmpl using vi as root and add the following lines:

version = 2

[plugins."io.containerd.internal.v1.opt"]

path = "/var/lib/rancher/k3s/agent/containerd"

[plugins."io.containerd.grpc.v1.cri"]

stream_server_address = "127.0.0.1"

stream_server_port = "10010"

enable_selinux = false

enable_unprivileged_ports = true

enable_unprivileged_icmp = true

sandbox_image = "management.umh.app/v2/rancher/mirrored-pause:3.6"

[plugins."io.containerd.grpc.v1.cri".containerd]

snapshotter = "overlayfs"

disable_snapshot_annotations = true

[plugins."io.containerd.grpc.v1.cri".cni]

bin_dir = "/var/lib/rancher/k3s/data/ab2055bc72380bad965b219e8688ac02b2e1b665cad6bdde1f8f087637aa81df/bin"

conf_dir = "/var/lib/rancher/k3s/agent/etc/cni/net.d"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

# Mirror configuration for Docker Hub with fallback

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://management.umh.app/oci", "https://registry-1.docker.io"]

# Mirror configuration for GitHub Container Registry with fallback

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."ghcr.io"]

endpoint = ["https://management.umh.app/oci", "https://ghcr.io"]

# Mirror configuration for Quay with fallback

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."quay.io"]

endpoint = ["https://management.umh.app/oci", "https://quay.io"]

# Catch-all configuration for any other registries

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."*"]

endpoint = ["https://management.umh.app/oci"]

Open /etc/flatcar/update.conf using vi as root and add the following lines:

GROUP=stable

SERVER=https://management.umh.app/nebraska/

Restart k3s or reboot the host system:

sudo systemctl restart k3s
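Before restarting, it can help to confirm that the mirror settings actually ended up in the containerd template. The following is a minimal sketch that only searches the file as plain text and does not validate the TOML syntax. The function name check_mirror_config is hypothetical.

```shell
# Check that the template mentions a mirror section for each registry
# and the UMH mirror endpoint. This is a plain-text search only.
check_mirror_config() {
  file="$1"
  for registry in docker.io ghcr.io quay.io; do
    if ! grep -qF "registry.mirrors.\"${registry}\"" "$file"; then
      echo "missing mirror section for ${registry}"
      return 1
    fi
  done
  if ! grep -qF 'https://management.umh.app/oci' "$file"; then
    echo "missing mirror endpoint"
    return 1
  fi
  echo "mirror configuration found"
}

# Example (the file may only be readable by root):
# check_mirror_config /var/lib/rancher/k3s/agent/etc/containerd/config.toml.tmpl
```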

Troubleshooting

If the upgrade fails for some reason, you can delete the deployments, statefulsets, and jobs listed below and try again. This will not delete your data.

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete deployment \
  united-manufacturing-hub-factoryinsight-deployment \
  united-manufacturing-hub-iotsensorsmqtt \
  united-manufacturing-hub-opcuasimulator-deployment \
  united-manufacturing-hub-packmlmqttsimulator \
  united-manufacturing-hub-mqttkafkabridge \
  united-manufacturing-hub-kafkatopostgresqlv2 \
  united-manufacturing-hub-kafkatopostgresql \
  united-manufacturing-hub-grafana \
  united-manufacturing-hub-databridge-0 \
  united-manufacturing-hub-console

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete statefulset \
  united-manufacturing-hub-hivemqce \
  united-manufacturing-hub-kafka \
  united-manufacturing-hub-nodered \
  united-manufacturing-hub-sensorconnect \
  united-manufacturing-hub-mqttbridge \
  united-manufacturing-hub-timescaledb \
  united-manufacturing-hub-redis-master

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete jobs \
  united-manufacturing-hub-kafka-configuration
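If some of these workloads are not enabled in your cluster, kubectl may report NotFound errors for the missing names. One way to avoid that is the --ignore-not-found flag of kubectl delete. The sketch below wraps it in a small helper. The names kubectl_cmd and delete_if_present, as well as the KUBECTL override, are illustrative assumptions.

```shell
# Run kubectl with sudo and its resolved path, as in the rest of this guide.
# KUBECTL=echo previews the command instead of running it; $KUBECTL is
# unquoted on purpose so that a multi-word override is split into words.
kubectl_cmd() {
  if [ -n "${KUBECTL:-}" ]; then
    $KUBECTL "$@"
  else
    sudo "$(command -v kubectl)" "$@"
  fi
}

# Delete resources of one kind, silently skipping names that do not exist.
delete_if_present() {
  kind="$1"
  shift
  kubectl_cmd delete "$kind" --ignore-not-found \
    -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml "$@"
}

# Example:
# delete_if_present deployment united-manufacturing-hub-grafana united-manufacturing-hub-console
```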

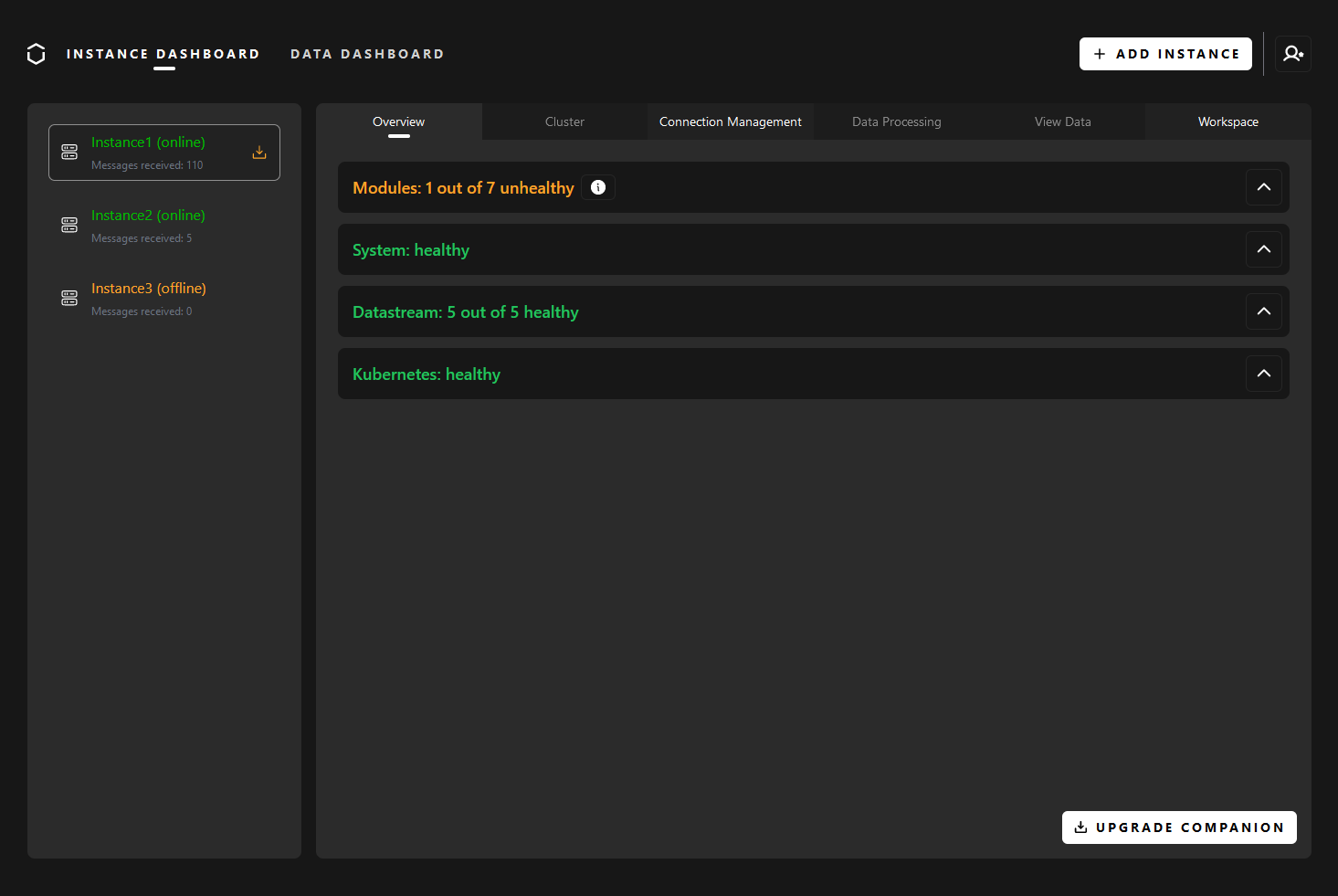

2.6 - Management Console Upgrades

Easily upgrade your UMH instance with the Management Console. This page offers clear, step-by-step instructions for a smooth upgrade process.

Before you begin

Before proceeding with the upgrade of the Companion, ensure that you have the following:

- A functioning UMH instance, verified as “online” and in good health.

- A reliable internet connection.

- Familiarity with the changelog of the new version you are upgrading to, especially to identify any breaking changes or required manual interventions.

Management Companion

Upgrade your UMH instance seamlessly using the Management Console. Follow these steps:

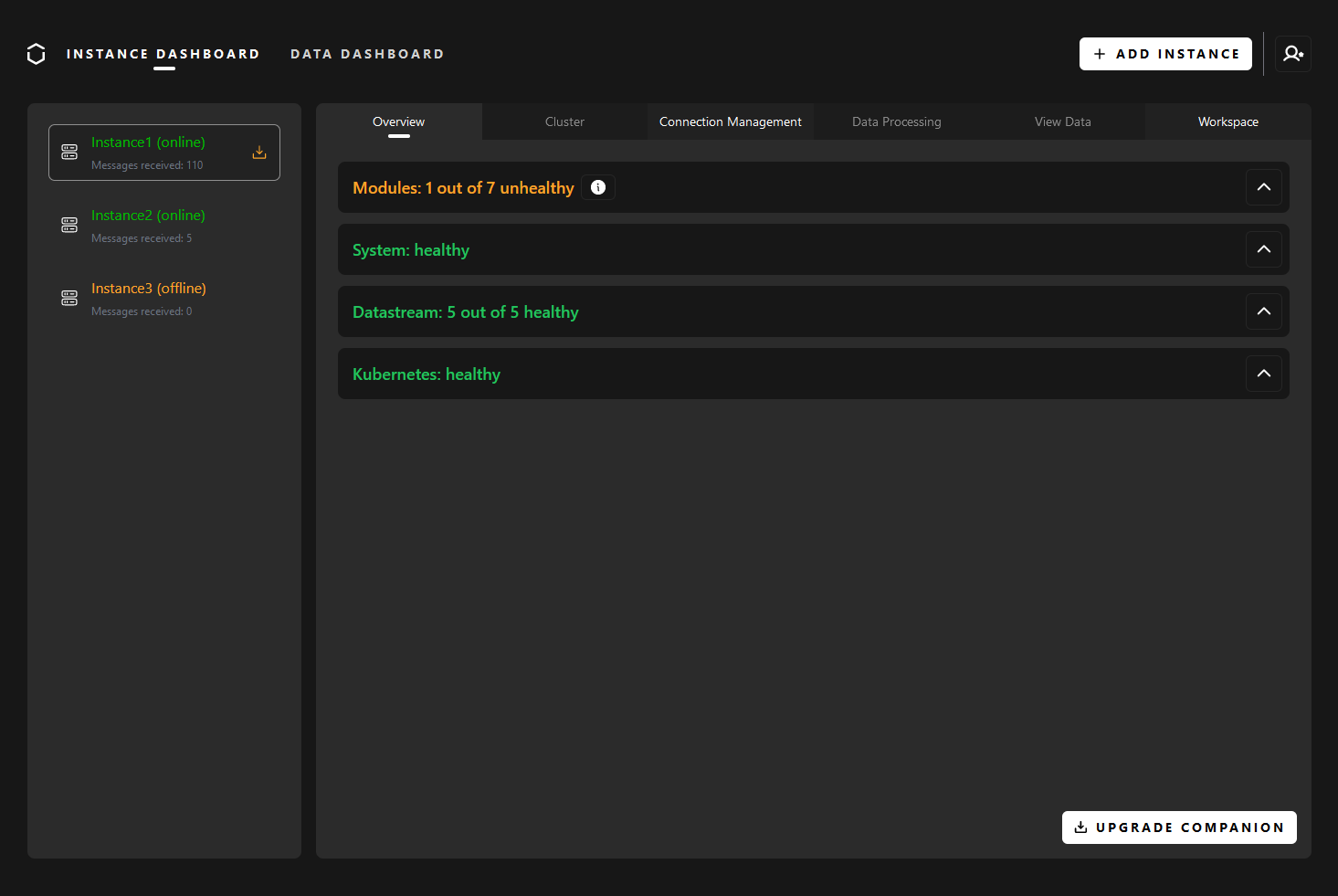

Identify Outdated Instance

From the Overview tab, check for an upgrade icon next to your instance’s name, signaling an outdated Companion version.

Additionally, locate the Upgrade Companion button at the bottom of the tab.

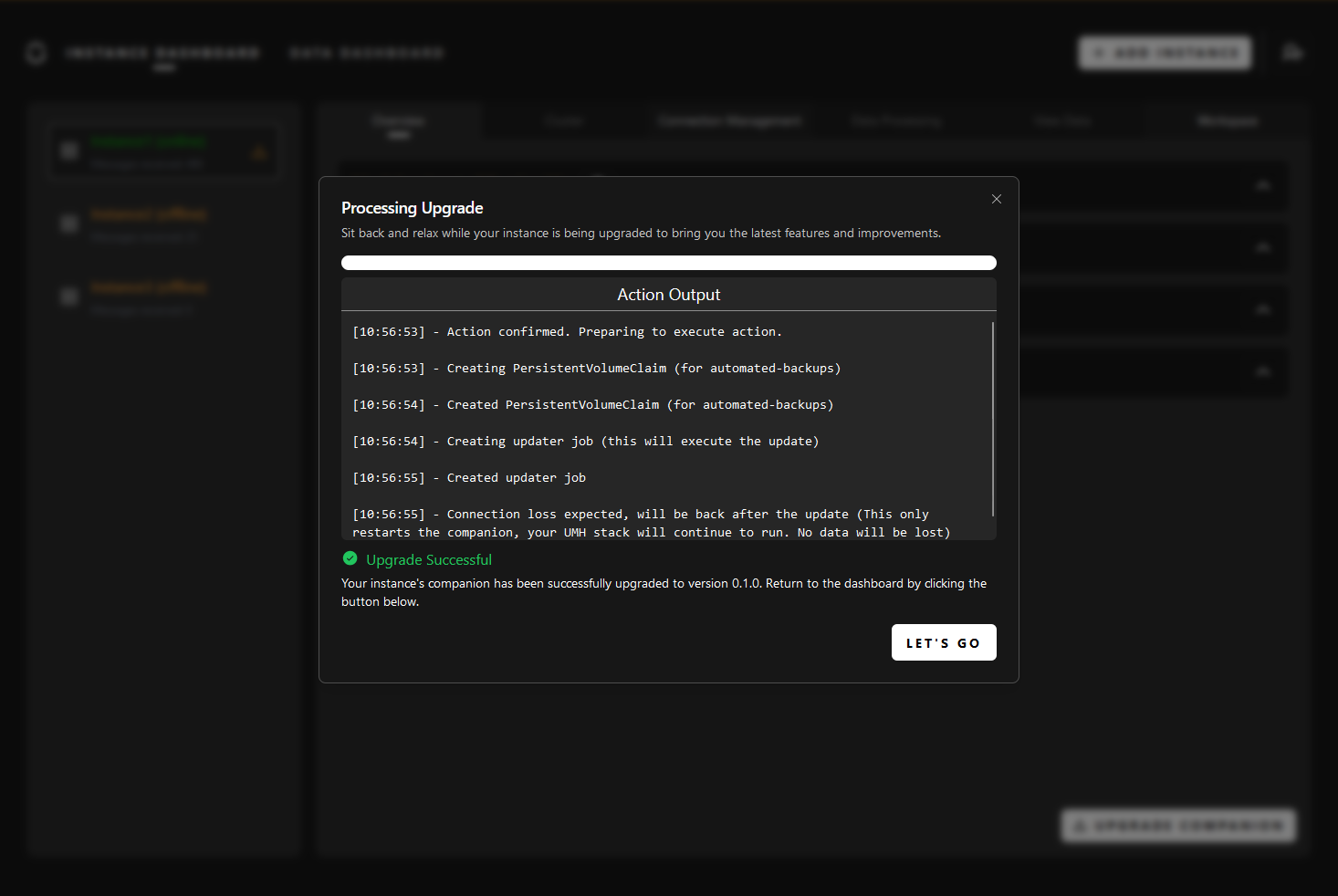

Start the Upgrade

When you’re prepared to upgrade your UMH instance, start by pressing the Upgrade Companion button. This will open a modal, initially displaying a changelog with a quick overview of the latest changes. You can expand the changelog for a detailed view from your current version up to the latest one. Additionally, it may highlight any warnings requiring manual intervention.

Navigate through the changelog, and when comfortable, proceed by clicking the Next button. This step grants you access to crucial information about recommended actions and precautions during the upgrade process.

With the necessary insights, take the next step by clicking the Upgrade button. The system will guide you through the upgrade process, displaying real-time progress updates, including a progress bar and logs.

Upon successful completion, a confirmation message will appear. Simply click the Let’s Go button to return to the dashboard, where you can seamlessly continue using your UMH instance with the latest enhancements.

United Manufacturing Hub

Upgrading the UMH itself is not yet included in the Management Console, so it has to be performed manually. It is planned to be included in the future. Until then, you can follow the instructions on the What’s New page.

Troubleshooting

I encountered an issue during the upgrade process. What should I do?

If you encounter issues during the upgrade process, consider the following steps:

Retry the Process: Sometimes, a transient issue may cause a hiccup. Retry the upgrade process to ensure it’s not a temporary glitch.

Check Logs: Review the logs displayed during the upgrade process for any error messages or indications of what might be causing the problem. This information can offer insights into potential issues.

If the problem persists after retrying and checking the logs, and you’ve confirmed that all prerequisites are met, please reach out to our support team for assistance.

I installed the Management Companion before the 0.1.0 release. How do I upgrade it?

If you installed the Management Companion before the 0.1.0 release, you will need to reinstall it. This is because we made some changes that are not compatible with the previous version.

Before reinstalling the Management Companion, you have to back up your configuration, so that you can restore your connections after the upgrade. To do so, follow these steps:

Access your UMH instance via SSH.

Run the following command to back up your configuration:

sudo $(which kubectl) get configmap/mgmtcompanion-config --kubeconfig /etc/rancher/k3s/k3s.yaml -n mgmtcompanion -o=jsonpath='{.data}' | sed -e 's/^/{"data":/' | sed -e 's/$/}/' > mgmtcompanion-config.bak.json

This will create a file called mgmtcompanion-config.bak.json in your current directory.

For good measure, copy the file to your local machine:

scp <user>@<ip>:/home/<user>/mgmtcompanion-config.bak.json .

Replace <user> with your username, and <ip> with the IP address of your UMH instance. You will be prompted for your password.

Now you can reinstall the Management Companion. Follow the instructions in the Installation guide. Your data will be preserved, and you will be able to restore your connections.

After the installation is complete, you can restore your connections by running the following command:

sudo $(which kubectl) patch configmap/mgmtcompanion-config --kubeconfig /etc/rancher/k3s/k3s.yaml -n mgmtcompanion --patch-file mgmtcompanion-config.bak.json
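The backup command wraps the ConfigMap data in a {"data": ...} envelope so that the file can later be passed to kubectl patch. The sketch below isolates that wrapping step and adds a simple sanity check that can be run before reinstalling. It assumes that the jsonpath output is a single line. The function names are hypothetical.

```shell
# Wrap the data object read from stdin in a {"data": ...} envelope.
# Assumption: the input is a single line, otherwise every line is wrapped.
wrap_configmap_data() {
  sed -e 's/^/{"data":/' -e 's/$/}/'
}

# Succeeds only if the backup file is non-empty and starts with the envelope.
# This does not check that the file is valid JSON.
backup_looks_usable() {
  file="$1"
  [ -s "$file" ] && grep -q '^{"data":' "$file"
}

# Example:
# backup_looks_usable mgmtcompanion-config.bak.json || echo "backup is missing or empty"
```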

2.7 - Migrate to Data Model V1

In this guide, you will learn how to migrate your existing instances from the old Data Model to the new Data Model V1.

The old Data Model will continue to work, and all the data will still be available.

Before you begin

You need to have a UMH cluster. If you do not already have a cluster, you can create one by following the Getting Started guide.

You also need to access the system where the cluster is running, either by logging into it or by using a remote shell.

Upgrade Your Companion to the Latest Version

If you haven’t already, upgrade your Companion to the latest version. You can easily do this from the Management Console by selecting your Instance and clicking on the “Upgrade” button.

Upgrade the Helm Chart

The new Data Model was introduced in the 0.10 release of the Helm Chart. To upgrade to the latest 0.10 release, you first need to update the Helm Chart to the latest 0.9 release and then upgrade to the latest 0.10 release.

There is no automatic way (yet!) to upgrade the Helm Chart, so you need to follow the manual steps below.

First, after accessing your instance, find the Helm Chart version you are currently using by running the following command:

sudo $(which helm) get metadata united-manufacturing-hub -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml | grep -e ^VERSION

Then, head to the upgrading archive and follow the instructions to upgrade from your current version to the latest version, one version at a time.
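To plan the one-version-at-a-time path through the 0.9 releases, a small helper can list the archive versions that come after your current one. This is a sketch under two assumptions: the metadata output contains a line of the form "VERSION: x.y.z", and the version list mirrors the pages in the upgrading archive. The function names are hypothetical, and the 0.10 upgrade follows separately.

```shell
# Versions covered by the upgrading archive, oldest first.
ARCHIVE_VERSIONS="0.9.4 0.9.5 0.9.6 0.9.7 0.9.8 0.9.9 0.9.10 0.9.11 0.9.12 0.9.13 0.9.14 0.9.15 0.9.34"

# Extract the chart version from `helm get metadata` output read on stdin.
chart_version() {
  awk '/^VERSION:/ { print $2 }'
}

# Print every archive version that comes after the given one.
# Prints nothing if the version is the last entry or not in the list.
remaining_upgrades() {
  current="$1"
  found=0
  for v in $ARCHIVE_VERSIONS; do
    if [ "$found" -eq 1 ]; then
      printf '%s\n' "$v"
    elif [ "$v" = "$current" ]; then
      found=1
    fi
  done
}

# Example:
# remaining_upgrades 0.9.12
```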

2.8 - Archive

The United Manufacturing Hub is a continuously evolving product. This means that new features and bug fixes are added to the product on a regular basis. This section contains the upgrading guides for the different versions of the United Manufacturing Hub.

The upgrading process is done by upgrading the Helm chart.

2.8.1 - Upgrade to v0.9.34

This page describes how to upgrade the United Manufacturing Hub to version 0.9.34. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

All the following commands are to be run from the UMH instance’s shell.

Update Helm Repo

Fetch the latest Helm charts from the UMH repository:

sudo $(which helm) repo update --kubeconfig /etc/rancher/k3s/k3s.yaml

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime.

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete deployment united-manufacturing-hub-factoryinsight-deployment united-manufacturing-hub-iotsensorsmqtt united-manufacturing-hub-opcuasimulator-deployment

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml delete statefulset united-manufacturing-hub-hivemqce united-manufacturing-hub-kafka united-manufacturing-hub-nodered united-manufacturing-hub-sensorconnect united-manufacturing-hub-mqttbridge

Upgrade Helm Chart

Upgrade the Helm chart to the 0.9.34 version:

sudo helm upgrade united-manufacturing-hub united-manufacturing-hub/united-manufacturing-hub -n united-manufacturing-hub --version 0.9.34 --reuse-values --kubeconfig /etc/rancher/k3s/k3s.yaml \
  --set kafkatopostgresqlv2.enabled=false \
  --set kafkatopostgresqlv2.image.repository=ghcr.io/united-manufacturing-hub/kafka-to-postgresql-v2 \
  --set kafkatopostgresqlv2.image.pullPolicy=IfNotPresent \
  --set kafkatopostgresqlv2.replicas=1 \
  --set kafkatopostgresqlv2.resources.limits.cpu=1000m \
  --set kafkatopostgresqlv2.resources.limits.memory=300Mi \
  --set kafkatopostgresqlv2.resources.requests.cpu=100m \
  --set kafkatopostgresqlv2.resources.requests.memory=150Mi \
  --set kafkatopostgresqlv2.probes.startup.failureThreshold=30 \
  --set kafkatopostgresqlv2.probes.startup.initialDelaySeconds=10 \
  --set kafkatopostgresqlv2.probes.startup.periodSeconds=10 \
  --set kafkatopostgresqlv2.probes.liveness.periodSeconds=5 \
  --set kafkatopostgresqlv2.probes.readiness.periodSeconds=5 \
  --set kafkatopostgresqlv2.logging.level=PRODUCTION \
  --set kafkatopostgresqlv2.asset.cache.lru.size=1000 \
  --set kafkatopostgresqlv2.workers.channel.size=10000 \
  --set kafkatopostgresqlv2.workers.goroutines.multiplier=16 \
  --set kafkatopostgresqlv2.database.user=kafkatopostgresqlv2 \
  --set kafkatopostgresqlv2.database.password=changemetoo \
  --set _000_commonConfig.datamodel_v2.enabled=true \
  --set _000_commonConfig.datamodel_v2.bridges[0].mode=mqtt-kafka \
  --set _000_commonConfig.datamodel_v2.bridges[0].brokerA=united-manufacturing-hub-mqtt:1883 \
  --set _000_commonConfig.datamodel_v2.bridges[0].brokerB=united-manufacturing-hub-kafka:9092 \
  --set _000_commonConfig.datamodel_v2.bridges[0].topic=umh.v1..* \
  --set _000_commonConfig.datamodel_v2.bridges[0].topicMergePoint=5 \
  --set _000_commonConfig.datamodel_v2.bridges[0].partitions=6 \
  --set _000_commonConfig.datamodel_v2.bridges[0].replicationFactor=1 \
  --set _000_commonConfig.datamodel_v2.database.name=umh_v2 \
  --set _000_commonConfig.datamodel_v2.database.host=united-manufacturing-hub \
  --set _000_commonConfig.datamodel_v2.grafana.dbreader=grafanareader \
  --set _000_commonConfig.datamodel_v2.grafana.dbpassword=changeme

Update Database

There have been some changes to the database, which need to be applied. This process does not delete any data.

sudo $(which kubectl) -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml exec -it united-manufacturing-hub-timescaledb-0 -c timescaledb -- sh -c ". /etc/timescaledb/post_init.d/0_create_dbs.sh; . /etc/timescaledb/post_init.d/1_set_passwords.sh"

Restart kafka-to-postgresql-v2

sudo $(which kubectl) rollout restart deployment united-manufacturing-hub-kafkatopostgresqlv2 -n united-manufacturing-hub --kubeconfig /etc/rancher/k3s/k3s.yaml

2.8.2 - Upgrade to v0.9.15

This page describes how to upgrade the United Manufacturing Hub to version 0.9.15. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.
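If you prefer the command line over UMHLens / OpenLens, the same repository can be added with Helm from the instance’s shell. This is a minimal sketch that reuses the repository name and URL given above. The helm_cmd helper and the HELM override are illustrative assumptions.

```shell
# Run helm with sudo and its resolved path. HELM=echo previews the
# commands; $HELM is unquoted on purpose so that a multi-word override
# is split into words.
helm_cmd() {
  if [ -n "${HELM:-}" ]; then
    $HELM "$@"
  else
    sudo "$(command -v helm)" "$@"
  fi
}

# Add the UMH chart repository, then refresh the local chart index.
add_umh_repo() {
  helm_cmd repo add united-manufacturing-hub https://repo.umh.app \
    --kubeconfig /etc/rancher/k3s/k3s.yaml &&
    helm_cmd repo update --kubeconfig /etc/rancher/k3s/k3s.yaml
}
```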

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-opcuasimulator-deployment

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-grafanaproxy

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-kafka

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

- united-manufacturing-hub-mqttbridge

- Open the Network tab.

- From the Services section, delete the following services:

- united-manufacturing-hub-kafka

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

Navigate to the Helm > Releases tab.

Select the united-manufacturing-hub release and click Upgrade.

In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

You can also change the values of the Helm chart, if needed. If you want to activate the new databridge you need to add & edit the following section:

    _000_commonConfig:
      ...
      datamodel_v2:
        enabled: true
        bridges:
          - mode: mqtt-kafka
            brokerA: united-manufacturing-hub-mqtt:1883 # The flow is always from A->B, for omni-directional flow, set up a 2nd bridge with reversed broker setup
            brokerB: united-manufacturing-hub-kafka:9092
            topic: umh.v1..* # accept mqtt or kafka topic format. after the topic separator, you can use # for mqtt wildcard, or .* for kafka wildcard
            topicMergePoint: 5 # This is a new feature of our datamodel_old, which splits topics in topic and key (only in Kafka), preventing having lots of topics
            partitions: 6 # optional: number of partitions for the new kafka topic. default: 6
            replicationFactor: 1 # optional: replication factor for the new kafka topic. default: 1
      ...

You can also enable the new container registry by changing the values in the image or image.repository fields from unitedmanufacturinghub/<image-name> to ghcr.io/united-manufacturing-hub/<image-name>.

Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.
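The release status can also be checked from the instance’s shell instead of the UMHLens / OpenLens window. The following sketch reads the text output of helm status and succeeds only if it contains the line "STATUS: deployed". The function name release_is_deployed is hypothetical.

```shell
# Reads `helm status` text output on stdin and succeeds only if the
# release status is "deployed".
release_is_deployed() {
  grep -q '^STATUS: deployed$'
}

# Example:
# sudo $(which helm) status united-manufacturing-hub -n united-manufacturing-hub \
#   --kubeconfig /etc/rancher/k3s/k3s.yaml | release_is_deployed && echo "upgrade complete"
```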

2.8.3 - Upgrade to v0.9.14

This page describes how to upgrade the United Manufacturing Hub to version 0.9.14. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-opcuasimulator-deployment

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-grafanaproxy

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-kafka

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

- united-manufacturing-hub-mqttbridge

- Open the Network tab.

- From the Services section, delete the following services:

- united-manufacturing-hub-kafka

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

Navigate to the Helm > Releases tab.

Select the united-manufacturing-hub release and click Upgrade.

In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

You can also change the values of the Helm chart, if needed. For example, if you want to apply the new tweaks to the resources in order to avoid the Out Of Memory crash of the MQTT Broker, you can change the following values:

    iotsensorsmqtt:
      resources:
        requests:
          cpu: 10m
          memory: 20Mi
        limits:
          cpu: 30m
          memory: 50Mi
    grafanaproxy:
      resources:
        requests:
          cpu: 100m
        limits:
          cpu: 300m
    kafkatopostgresql:
      resources:
        requests:
          memory: 150Mi
        limits:
          memory: 300Mi
    opcuasimulator:
      resources:
        requests:
          cpu: 10m
          memory: 20Mi
        limits:
          cpu: 30m
          memory: 50Mi
    packmlmqttsimulator:
      resources:
        requests:
          cpu: 10m
          memory: 20Mi
        limits:
          cpu: 30m
          memory: 50Mi
    tulipconnector:
      resources:
        limits:
          cpu: 30m
          memory: 50Mi
        requests:
          cpu: 10m
          memory: 20Mi
    redis:
      master:
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 50m
            memory: 50Mi
    mqtt_broker:
      resources:
        limits:
          cpu: 700m
          memory: 1700Mi
        requests:
          cpu: 300m
          memory: 1000Mi

You can also enable the new container registry by changing the values in the image or image.repository fields from unitedmanufacturinghub/<image-name> to ghcr.io/united-manufacturing-hub/<image-name>.

Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.

2.8.4 - Upgrade to v0.9.13

This page describes how to upgrade the United Manufacturing Hub to version 0.9.13. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-barcodereader

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-kafkatopostgresql

- united-manufacturing-hub-mqttkafkabridge

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-opcuasimulator-deployment

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-mqttbridge

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

- Navigate to the Helm > Releases tab.

- Select the united-manufacturing-hub release and click Upgrade.

- In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

- You can also change the values of the Helm chart, if needed.

- Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.

2.8.5 - Upgrade to v0.9.12

This page describes how to upgrade the United Manufacturing Hub to version 0.9.12. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.

Backup RBAC configuration for MQTT Broker

This step is only needed if you enabled RBAC for the MQTT Broker and changed the default password. If you did not change the default password, you can skip this step.

- Navigate to Config > ConfigMaps.

- Select the united-manufacturing-hub-hivemqce-extension ConfigMap.

- Copy the content of credentials.xml and save it in a safe place.

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-barcodereader

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-kafkatopostgresql

- united-manufacturing-hub-mqttkafkabridge

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-opcuasimulator-deployment

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-mqttbridge

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

Remove MQTT Broker extension PVC

In this version we reduced the size of the MQTT Broker extension PVC. To do so, we need to delete the old PVC and create a new one. This process will set the credentials of the MQTT Broker to the default ones. If you changed the default password, you can restore them after the upgrade.

- Navigate to Storage > Persistent Volume Claims.

- Select the united-manufacturing-hub-hivemqce-claim-extensions PVC and click Delete.

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

Navigate to the Helm > Releases tab.

Select the united-manufacturing-hub release and click Upgrade.

In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

There are some incompatible changes in this version. To avoid errors, you need to change the following values:

- Remove the property console.console.config.kafka.tls.passphrase:

    console:
      console:
        config:
          kafka:
            tls:
              passphrase: "" # <- remove this line

- console.extraContainers: remove the property and its content.

    console:
      extraContainers: {} # <- remove this line

- console.extraEnv: remove the property and its content.

    console:
      extraEnv: "" # <- remove this line

- console.extraEnvFrom: remove the property and its content.

    console:
      extraEnvFrom: "" # <- remove this line

- console.extraVolumeMounts: remove the |- characters right after the property name. It should look like this:

    console:
      extraVolumeMounts: # <- remove the `|-` characters in this line
        - name: united-manufacturing-hub-kowl-certificates
          mountPath: /SSL_certs/kafka
          readOnly: true

- console.extraVolumes: remove the |- characters right after the property name. It should look like this:

    console:
      extraVolumes: # <- remove the `|-` characters in this line
        - name: united-manufacturing-hub-kowl-certificates
          secret:
            secretName: united-manufacturing-hub-kowl-secrets

- Change the console.service property to the following:

    console:
      service:
        type: LoadBalancer
        port: 8090
        targetPort: 8080

- Change the Redis URI in factoryinsight.redis:

    factoryinsight:
      redis:
        URI: united-manufacturing-hub-redis-headless:6379

- Set the following values in the kafka section to true, or add them if they are missing:

    kafka:
      externalAccess:
        autoDiscovery:
          enabled: true
        enabled: true
      rbac:
        create: true

- Change redis.architecture to standalone:

    redis:
      architecture: standalone

- redis.sentinel: remove the property and its content.

    redis:
      sentinel: {} # <- remove all the content of this section

- Remove the property redis.master.command:

    redis:
      master:
        command: /run.sh # <- remove this line

- timescaledb-single.fullWalPrevention: remove the property and its content.

    timescaledb-single:
      fullWalPrevention: # <- remove this line
        checkFrequency: 30 # <- remove this line
        enabled: false # <- remove this line
        thresholds: # <- remove this line
          readOnlyFreeMB: 64 # <- remove this line
          readOnlyFreePercent: 5 # <- remove this line
          readWriteFreeMB: 128 # <- remove this line
          readWriteFreePercent: 8 # <- remove this line

- timescaledb-single.loadBalancer: remove the property and its content.

    timescaledb-single:
      loadBalancer: # <- remove this line
        annotations: # <- remove this line
          service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "4000" # <- remove this line
        enabled: true # <- remove this line
        port: 5432 # <- remove this line

- timescaledb-single.replicaLoadBalancer: remove the property and its content.

    timescaledb-single:
      replicaLoadBalancer:
        annotations: # <- remove this line
          service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "4000" # <- remove this line
        enabled: false # <- remove this line
        port: 5432 # <- remove this line

- timescaledb-single.secretNames: remove the property and its content.

    timescaledb-single:
      secretNames: {} # <- remove this line

- timescaledb-single.unsafe: remove the property and its content.

    timescaledb-single:
      unsafe: false # <- remove this line

- Change the value of the timescaledb-single.service.primary.type property to LoadBalancer:

    timescaledb-single:
      service:
        primary:
          type: LoadBalancer

Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.
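With this many values to remove, it is easy to miss one. The sketch below scans an exported values file for a subset of the property names that this version removes. It matches key names only, without looking at where they sit in the YAML, so a hit is a hint to inspect the file and not proof of a problem. The function name, the key subset, and the file name values.yaml are assumptions.

```shell
# Subset of the keys removed in this version. Keys such as "command" or
# "passphrase" are left out because they are likely to appear elsewhere.
STALE_KEYS="extraContainers extraEnv extraEnvFrom sentinel fullWalPrevention replicaLoadBalancer secretNames unsafe"

# Print each stale key that still appears as a YAML key in the given file.
find_stale_keys() {
  file="$1"
  for key in $STALE_KEYS; do
    if grep -qE "^[[:space:]]*${key}:" "$file"; then
      printf '%s\n' "$key"
    fi
  done
}

# Example: export the current values, then scan them.
# sudo $(which helm) get values united-manufacturing-hub -n united-manufacturing-hub \
#   --kubeconfig /etc/rancher/k3s/k3s.yaml -o yaml > values.yaml
# find_stale_keys values.yaml
```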

2.8.6 - Upgrade to v0.9.11

This page describes how to upgrade the United Manufacturing Hub to version 0.9.11. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-barcodereader

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-kafkatopostgresql

- united-manufacturing-hub-mqttkafkabridge

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-opcuasimulator-deployment

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-mqttbridge

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

- Navigate to the Helm > Releases tab.

- Select the united-manufacturing-hub release and click Upgrade.

- In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

- You can also change the values of the Helm chart, if needed.

- Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.

2.8.7 - Upgrade to v0.9.10

This page describes how to upgrade the United Manufacturing Hub to version 0.9.10. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.

Grafana plugins

In this release, the Grafana version has been updated from 8.5.9 to 9.3.1. Check the release notes for further information about the changes.

Additionally, the way default plugins are installed has changed. Unfortunately, it is necessary to manually install all the plugins that were previously installed.

If you didn’t install any plugin other than the default ones, you can skip this section.

Follow these steps to see the list of plugins installed in your cluster:

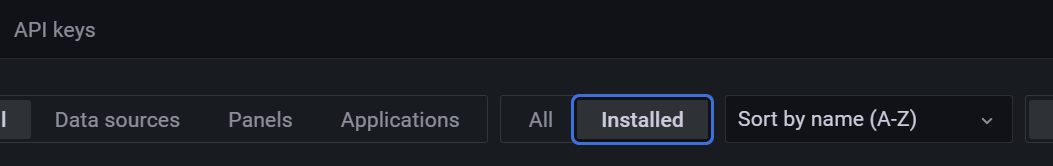

Open the browser and go to the Grafana dashboard.

Navigate to the Configuration > Plugins tab.

Select the Installed filter.

Write down all the plugins that you manually installed. You can recognize them by not having the Core tag.

The following ones are installed by default, therefore you can skip them:

- ACE.SVG by Andrew Rodgers

- Button Panel by UMH Systems Gmbh

- Button Panel by CloudSpout LLC

- Discrete by Natel Energy

- Dynamic Text by Marcus Olsson

- FlowCharting by agent

- Pareto Chart by isaozler

- Pie Chart (old) by Grafana Labs

- Timepicker Buttons Panel by williamvenner

- UMH Datasource by UMH Systems Gmbh

- Untimely by factry

- Worldmap Panel by Grafana Labs

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-barcodereader

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-grafana

- united-manufacturing-hub-kafkatopostgresql

- united-manufacturing-hub-mqttkafkabridge

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-opcuasimulator-deployment

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-mqttbridge

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

Navigate to the Helm > Releases tab.

Select the united-manufacturing-hub release and click Upgrade.

In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

You can also change the values of the Helm chart, if needed.

In the grafana section, find the extraInitContainers field and change its value to the following:

    - image: unitedmanufacturinghub/grafana-umh:1.1.2
      name: init-plugins
      imagePullPolicy: IfNotPresent
      command: ['sh', '-c', 'cp -r /plugins /var/lib/grafana/']
      volumeMounts:
        - name: storage
          mountPath: /var/lib/grafana

Make these changes in the kafka section:

Set the value of the heapOpts field to -Xmx2048m -Xms2048m.

Replace the content of the resources section with the following:

    limits:
      cpu: 1000m
      memory: 4Gi
    requests:
      cpu: 100m
      memory: 2560Mi

Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.

Afterwards, you can reinstall the additional Grafana plugins.
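The guide does not explain the Kafka sizing, but a JVM also uses memory outside its heap, so the container memory has to be larger than the heap. The sketch below is a sanity check under that assumption, not an official sizing rule: it converts the heapOpts and resources values from the upgrade steps to bytes and compares them. The helper names are hypothetical, and only the k/m/g and Ki/Mi/Gi suffixes are handled.

```python
import re

# JVM size suffixes and Kubernetes binary suffixes, both powers of 1024.
_JVM_UNITS = {"k": 1024, "m": 1024**2, "g": 1024**3}
_K8S_UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def jvm_max_heap_bytes(heap_opts):
    """Extract the -Xmx value from a JVM options string, in bytes."""
    match = re.search(r"-Xmx(\d+)([kKmMgG])", heap_opts)
    if match is None:
        raise ValueError("no -Xmx option with a k/m/g suffix found")
    return int(match.group(1)) * _JVM_UNITS[match.group(2).lower()]


def k8s_memory_bytes(quantity):
    """Convert a Kubernetes memory quantity with a Ki/Mi/Gi suffix to bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi)", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    return int(match.group(1)) * _K8S_UNITS[match.group(2)]


if __name__ == "__main__":
    mib = 1024**2
    heap = jvm_max_heap_bytes("-Xmx2048m -Xms2048m")
    request = k8s_memory_bytes("2560Mi")
    limit = k8s_memory_bytes("4Gi")
    print(f"heap={heap // mib} MiB, request={request // mib} MiB, limit={limit // mib} MiB")
    print("heap fits within request and limit:", heap <= request <= limit)
```

With the values from this page, the heap is 2048 MiB, the memory request is 2560 MiB, and the limit is 4096 MiB.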

Replace VerneMQ with HiveMQ

In this upgrade we switched from using VerneMQ to HiveMQ as our MQTT Broker (you can read the blog article about it).

While this process is fully backwards compatible, we suggest updating Node-RED flows and any other service that uses MQTT to use the new broker service, called united-manufacturing-hub-mqtt. The old united-manufacturing-hub-vernemq service is still functional and, despite its name, also points to HiveMQ, but it will be removed in a future upgrade.

Additionally, for production environments, we recommend enabling RBAC for the MQTT Broker.

Please double-check that all of your services can connect to the new MQTT broker. They might need to be restarted so that they can resolve the DNS name and get the new IP address. Also, with tools like ChirpStack, you might need to specify the client ID explicitly: the automatically generated ID worked with VerneMQ but is rejected by HiveMQ.

Troubleshooting

Some microservices can’t connect to the new MQTT broker

If you are using the united-manufacturing-hub-mqtt service, but some microservice can’t connect to it, restarting the microservice might solve the issue. To do so, you can delete the Pod of the microservice and let Kubernetes recreate it.
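Before or after restarting a microservice, it can help to confirm that the broker's service name resolves and accepts TCP connections from inside the cluster. The following is a minimal sketch that checks reachability only, not MQTT authentication or the client ID. The function name is hypothetical, and port 1883 (the standard unencrypted MQTT port) is an assumption about your broker configuration.

```python
import socket


def can_reach(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened.

    DNS failures, refused connections, and timeouts all raise OSError
    subclasses, so each of them is reported as False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Run from a Pod in the same namespace, `can_reach("united-manufacturing-hub-mqtt", 1883)` returning False suggests a DNS or networking problem rather than a problem in the microservice itself.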

ChirpStack can’t connect to the new MQTT broker

ChirpStack uses a generated client-id to connect to the MQTT broker. This client-id is not accepted by HiveMQ. To solve this issue, you can set the client_id field in the integration.mqtt section of the ChirpStack configuration file to a fixed value:

    [integration]
    ...
    [integration.mqtt]
    client_id="chirpstack"

2.8.8 - Upgrade to v0.9.9

This page describes how to upgrade the United Manufacturing Hub to version 0.9.9. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-barcodereader

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-kafkatopostgresql

- united-manufacturing-hub-mqttkafkabridge

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-opcuasimulator-deployment

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-mqttbridge

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

- Navigate to the Helm > Releases tab.

- Select the united-manufacturing-hub release and click Upgrade.

- In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

- You can also change the values of the Helm chart, if needed.

In the grafana section, find the extraInitContainers field and change the value of the image field to unitedmanufacturinghub/grafana-plugin-extractor:0.1.4.

- Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.

2.8.9 - Upgrade to v0.9.8

This page describes how to upgrade the United Manufacturing Hub to version 0.9.8. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-barcodereader

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-kafkatopostgresql

- united-manufacturing-hub-mqttkafkabridge

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-opcuasimulator-deployment

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-mqttbridge

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

- Navigate to the Helm > Releases tab.

- Select the united-manufacturing-hub release and click Upgrade.

- In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

- You can also change the values of the Helm chart, if needed.

- Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.

2.8.10 - Upgrade to v0.9.7

This page describes how to upgrade the United Manufacturing Hub to version 0.9.7. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).

Click on the Kubernetes tab and check if the Helm Chart section contains the https://repo.umh.app repository.

If it doesn’t, click the Add Custom Helm Repo button and fill in the following values:

- Helm repo name: united-manufacturing-hub

- URL: https://repo.umh.app

Then click Add.

Clear Workloads

Some workloads need to be deleted before upgrading. This process does not delete any data, but it will cause downtime. If a workload is missing, it means that it was not enabled in your cluster, therefore you can skip it.

To delete a resource, you can select it using the box on the left of the resource name and click the - button on the bottom right corner.

- Open the Workloads tab.

- From the Deployment section, delete the following deployments:

- united-manufacturing-hub-barcodereader

- united-manufacturing-hub-factoryinsight-deployment

- united-manufacturing-hub-kafkatopostgresql

- united-manufacturing-hub-mqttkafkabridge

- united-manufacturing-hub-iotsensorsmqtt

- united-manufacturing-hub-opcuasimulator-deployment

- From the StatefulSet section, delete the following statefulsets:

- united-manufacturing-hub-mqttbridge

- united-manufacturing-hub-hivemqce

- united-manufacturing-hub-nodered

- united-manufacturing-hub-sensorconnect

Upgrade Helm Chart

Now everything is ready to upgrade the Helm chart.

- Navigate to the Helm > Releases tab.

- Select the united-manufacturing-hub release and click Upgrade.

- In the Helm Upgrade window, make sure that the Upgrade version field contains the version you want to upgrade to.

- You can also change the values of the Helm chart, if needed.

Make these changes in the grafana section:

Replace the content of datasources with the following:

    datasources.yaml:
      apiVersion: 1
      datasources:
        - access: proxy
          editable: false
          isDefault: true
          jsonData:
            apiKey: $FACTORYINSIGHT_PASSWORD
            apiKeyConfigured: true
            customerId: $FACTORYINSIGHT_CUSTOMERID
            serverURL: http://united-manufacturing-hub-factoryinsight-service/
          name: umh-datasource
          orgId: 1
          type: umh-datasource
          url: http://united-manufacturing-hub-factoryinsight-service/
          version: 1
        - access: proxy
          editable: false
          isDefault: false
          jsonData:
            apiKey: $FACTORYINSIGHT_PASSWORD
            apiKeyConfigured: true
            baseURL: http://united-manufacturing-hub-factoryinsight-service/
            customerID: $FACTORYINSIGHT_CUSTOMERID
          name: umh-v2-datasource
          orgId: 1
          type: umh-v2-datasource
          url: http://united-manufacturing-hub-factoryinsight-service/
          version: 1

Replace the content of env with the following:

    GF_PLUGINS_ALLOW_LOADING_UNSIGNED_PLUGINS: umh-datasource,umh-factoryinput-panel,umh-v2-datasource

Replace the content of extraInitContainers with the following:

    - name: init-umh-datasource
      image: unitedmanufacturinghub/grafana-plugin-extractor:0.1.3
      volumeMounts:
        - name: storage
          mountPath: /var/lib/grafana
      imagePullPolicy: IfNotPresent

In the timescaledb-single section, make sure that the image.tag field is set to pg13.8-ts2.8.0-p1.

- Click Upgrade.

The upgrade process can take a few minutes. The upgrade is complete when the Status field of the release is Deployed.

Change Factoryinsight API version

The Factoryinsight API version has changed from v1 to v2. To make sure that you are using the new version, click on any Factoryinsight Pod and check that the VERSION environment variable is set to 2.
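The same check can be made on an exported Pod manifest, for example the JSON output of `kubectl get pod <pod-name> -o json`. The sketch below reads the standard `spec.containers[].env` structure of a Pod. The function name and the trimmed manifest are hypothetical, and variables populated through `valueFrom` have no literal value, so they are reported as None.

```python
import json


def container_env(pod, name):
    """Map each container name to the literal value of env var `name`.

    Containers that do not define the variable are omitted. A variable set
    through `valueFrom` has no literal value and is reported as None.
    """
    values = {}
    for container in pod.get("spec", {}).get("containers", []):
        for env in container.get("env", []):
            if env.get("name") == name:
                values[container["name"]] = env.get("value")
    return values


if __name__ == "__main__":
    # Hypothetical, heavily trimmed Pod manifest, for illustration only.
    manifest = json.loads("""
    {
      "spec": {
        "containers": [
          {
            "name": "factoryinsight",
            "env": [
              {"name": "LOGGING_LEVEL", "value": "PRODUCTION"},
              {"name": "VERSION", "value": "2"}
            ]
          }
        ]
      }
    }
    """)
    print(container_env(manifest, "VERSION"))
```

A value other than "2", or an empty result, means the Pod is not yet using the new API version.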

If it’s not, follow these steps:

2.8.11 - Upgrade to v0.9.6

This page describes how to upgrade the United Manufacturing Hub to version 0.9.6. Before upgrading, remember to back up the database, Node-RED flows, and your cluster configuration.

Add Helm repo in UMHLens / OpenLens

Check if the UMH Helm repository is added in UMHLens / OpenLens.

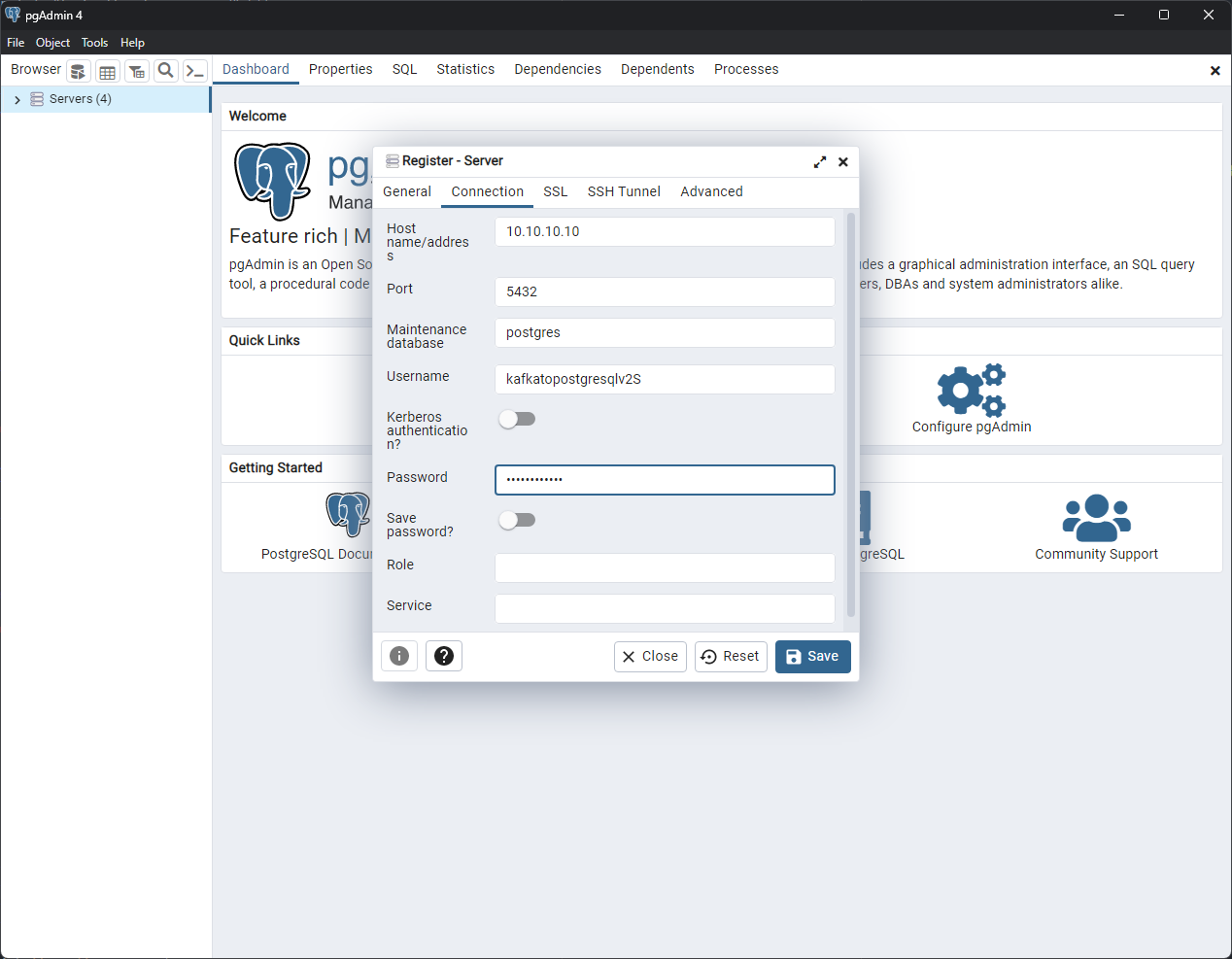

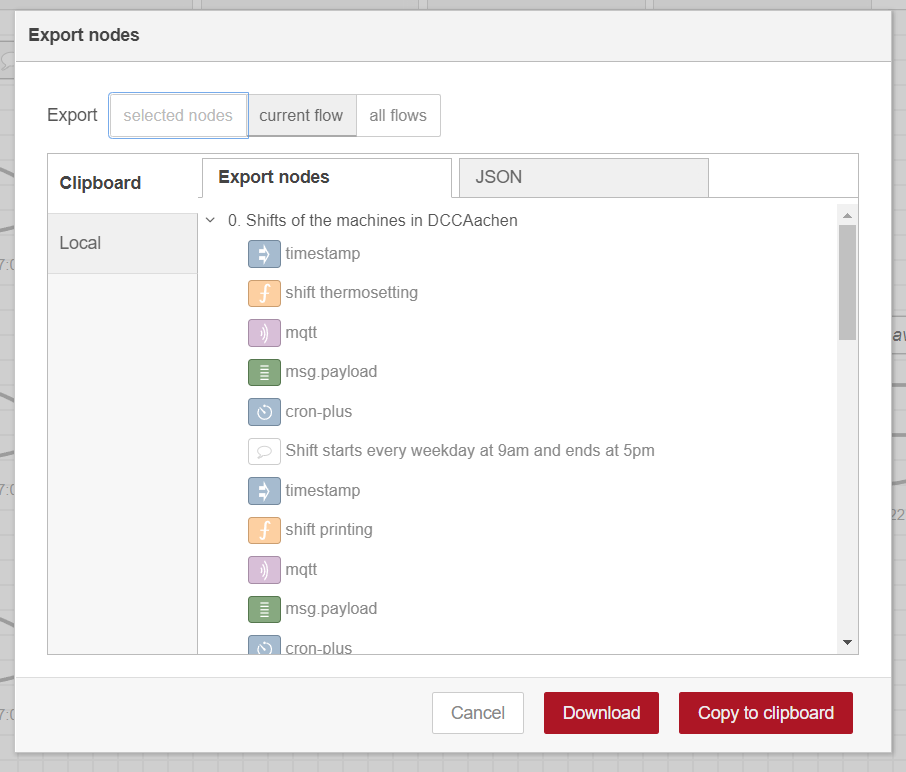

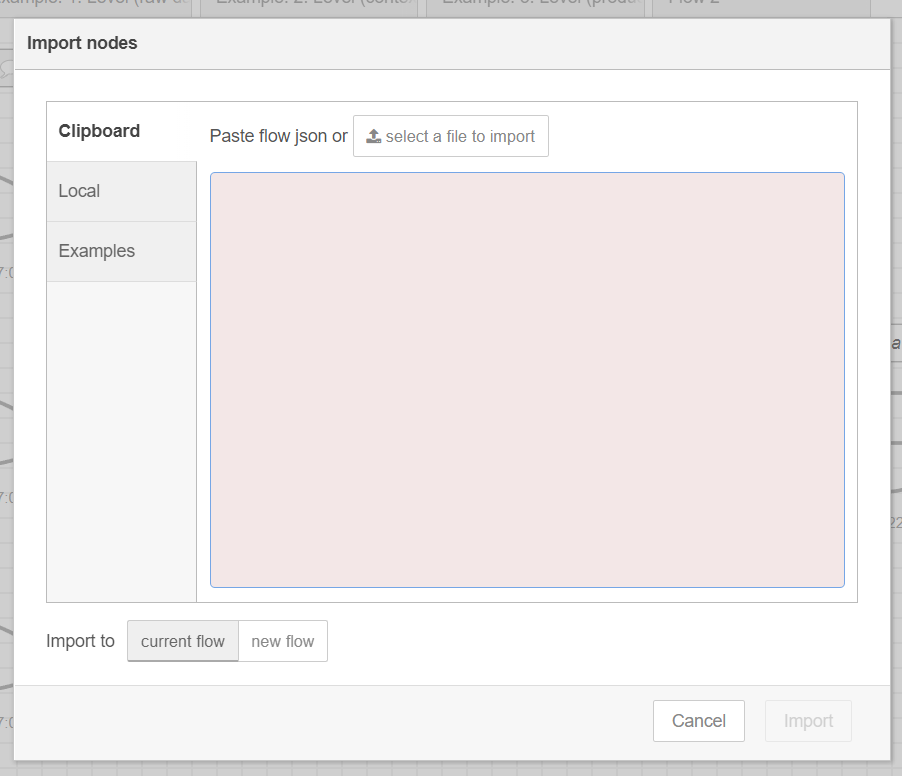

To do so, from the top-left menu, select File > Preferences (or press CTRL + ,).